The 2e30 FLOPS Future

The era of muddling through is over

A new benchmark is set to redefine everything in AI: 2e30 FLOPS models by 2029-2030. Here FLOPS refers to the total number of floating-point operations used to train a model (2 x 10^30 of them), not operations per second.

This is the amount of computation needed to train the largest AI model humanity has ever conceived of.

This figure (or a similar one) has been mentioned by several industry experts, including SemiAnalysis’s Dylan Patel in his conversation with Dwarkesh Patel, and in Epoch AI's recent blog post ‘Can AI Scaling Continue Through 2030?’.

But what does this number really mean?

I argue 2e30 is more than just a number.

It’s a beacon to shift humanity out of its ‘muddle through’ mindset.

Let's dive in.

“Whenever people think you can just muddle through, you’re probably set up for some kind of disaster.” - Peter Thiel

Scale of the leap

2e30 FLOPS perspective: 2e30 FLOPS represents 2 x 10^30 floating-point operations over the course of a training run. To put this in perspective, it's about 50,000 times the compute used to train Llama 3.1 405B, which Meta reports at 3.8 x 10^25 FLOPs. This isn't an incremental step; it's a giant leap in AI model scale.
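The 50,000x figure comes down to one division. A minimal sketch, assuming Meta's reported 3.8 x 10^25 FLOPs for Llama 3.1 405B as the baseline; the constant and function names are illustrative only.

```python
# Training compute Meta reports for Llama 3.1 405B (assumed baseline).
LLAMA_31_405B_FLOP = 3.8e25
# Total training compute discussed in this article.
TARGET_FLOP = 2e30


def compute_multiple(target_flop: float, baseline_flop: float) -> float:
    """Return how many times larger the target training run is than the baseline."""
    return target_flop / baseline_flop


ratio = compute_multiple(TARGET_FLOP, LLAMA_31_405B_FLOP)
print(f"2e30 FLOP is about {ratio:,.0f}x the compute of Llama 3.1 405B")
```

The exact ratio is a little over 52,000, so "about 50,000 times" is a fair rounding.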

GPUs: To train a single model at this scale, we would need approximately 5 million GPUs, each considerably more capable than today's H100s. This is a staggering number for a single training run, and it highlights the immense resources frontier AI models will demand in the coming years. The biggest cluster today, built by Elon Musk's xAI, has about 100,000 GPUs. So that’s a 50x increase in 6 years.
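One way to sanity-check a GPU count is to work backwards: divide the total compute by the number of chips and the training time to get the sustained throughput each chip must deliver. The sketch below assumes an H100 dense BF16 peak of roughly 989 TFLOP/s and 40% model FLOP utilization; both are assumptions, not figures from the sources above. Under them, 5 million chips finishing in 3-6 months would each need roughly 65-130x an H100's effective throughput, which is why the count only works with much faster future hardware.

```python
SECONDS_PER_DAY = 86_400


def implied_flops_per_gpu(total_flop: float, num_gpus: int, days: float) -> float:
    """Sustained FLOP/s each GPU must deliver to finish total_flop within `days`."""
    return total_flop / (num_gpus * days * SECONDS_PER_DAY)


# Assumption: ~989 TFLOP/s dense BF16 peak per H100, run at ~40% utilization.
H100_EFFECTIVE_FLOPS = 989e12 * 0.40

for days in (90, 180):  # the 3-6 month window discussed in the text
    need = implied_flops_per_gpu(2e30, 5_000_000, days)
    print(f"{days} days: {need:.2e} FLOP/s per GPU, "
          f"~{need / H100_EFFECTIVE_FLOPS:.0f}x an H100 at 40% utilization")

# Cluster scale-up relative to today's ~100,000-GPU cluster.
print(f"Scale-up vs. a 100,000-GPU cluster: {5_000_000 / 100_000:.0f}x")
```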

Power: The power requirements for such a model would be enormous: about 56 GW, roughly 2,000x what Llama 3.1 405B required. That exceeds even the upper end of Epoch AI's projection that 2 to 45 GW of power could be available for geographically distributed training runs by 2030.
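Power only becomes energy once it is multiplied by time. A minimal sketch, assuming the 56 GW is drawn continuously for the whole 3-6 month run; the resulting FLOP-per-joule figure is a facility-level number that includes cooling and networking overhead, not a chip specification.

```python
SECONDS_PER_DAY = 86_400
JOULES_PER_TWH = 3.6e15


def training_energy_twh(power_gw: float, days: float) -> float:
    """Energy drawn by a facility running at power_gw for `days`, in TWh."""
    return power_gw * 1e9 * days * SECONDS_PER_DAY / JOULES_PER_TWH


def implied_flop_per_joule(total_flop: float, power_gw: float, days: float) -> float:
    """Compute delivered per joule of facility energy if total_flop finishes in `days`."""
    return total_flop / (power_gw * 1e9 * days * SECONDS_PER_DAY)


EPOCH_UPPER_GW = 45  # upper end of Epoch AI's 2-45 GW range
POWER_GW = 56        # estimate from the text

print(f"56 GW vs. Epoch's upper bound: {POWER_GW / EPOCH_UPPER_GW:.2f}x")
for days in (90, 180):
    print(f"{days} days: {training_energy_twh(POWER_GW, days):.0f} TWh, "
          f"{implied_flop_per_joule(2e30, POWER_GW, days):.1e} FLOP/J")
```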

Data: A model of this scale would require an immense amount of training data. Epoch AI estimates a range of 400 trillion to 20 quadrillion effective tokens could be available by 2030. The 2e30 FLOPS model would likely need data at the higher end of this spectrum.
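A rough way to estimate the data requirement is the Chinchilla compute-optimal heuristic (Hoffmann et al., 2022), which is not part of Epoch's post: training compute C ≈ 6·N·D for N parameters and D tokens, with roughly 20 tokens per parameter. Treat both rules of thumb as assumptions. Under them, 2e30 FLOP splits into roughly 130 trillion parameters and about 2.6 quadrillion tokens, inside Epoch's range; labs that train smaller models on many more tokens per parameter, as Meta did with its Llama 3 models, would push toward the upper end.

```python
import math


def chinchilla_optimal(compute_flop: float, tokens_per_param: float = 20.0):
    """Split a compute budget into (params, tokens) using C ≈ 6·N·D and D ≈ k·N."""
    # Substituting D = k·N into C = 6·N·D gives C = 6·k·N², so N = sqrt(C / (6·k)).
    params = math.sqrt(compute_flop / (6.0 * tokens_per_param))
    tokens = tokens_per_param * params
    return params, tokens


n, d = chinchilla_optimal(2e30)
print(f"~{n:.2e} parameters trained on ~{d:.2e} tokens")

EPOCH_LOW, EPOCH_HIGH = 4e14, 2e16  # Epoch AI's 400 trillion to 20 quadrillion range
print("Within Epoch's range:", EPOCH_LOW <= d <= EPOCH_HIGH)
```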

Training duration and infrastructure: Training such a model could take 3-6 months, potentially tripling current training durations. This would require distributed training across multiple data centers, pushing the boundaries of our current infrastructure capabilities.

Investment in trillions: The cost of developing and training a single 2e30 FLOPS model would be astronomical, likely in the tens of billions of dollars, with cumulative spending in the hundreds of billions between now and 2030. This is consistent with projections that major tech companies will spend around $1 trillion on frontier models over a 6-year period. That’s a run rate of more than $150 billion a year, and that is the lower end of the forecast. For comparison, Saudi Aramco, one of the largest capital spenders in the world, invests about $50 billion a year. We are looking at some hyperscalers spending at least that much every year for a sustained 5-6 years, possibly longer.
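The run-rate arithmetic is a simple average. A minimal sketch, assuming the ~$1 trillion total is spread evenly over 6 years and using the ~$50 billion Aramco figure from the text; real spending would almost certainly ramp up over time rather than stay flat.

```python
def annual_run_rate(total_usd: float, years: float) -> float:
    """Average yearly spend if total_usd is spread evenly over `years`."""
    return total_usd / years


TOTAL_FRONTIER_SPEND = 1e12  # ~$1 trillion projection cited in the text
ARAMCO_ANNUAL_CAPEX = 50e9   # ~$50 billion a year, as cited in the text

rate = annual_run_rate(TOTAL_FRONTIER_SPEND, 6)
print(f"${rate / 1e9:.0f}B per year, "
      f"~{rate / ARAMCO_ANNUAL_CAPEX:.1f}x Aramco's annual capex")
```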

It's crucial to understand that the 2e30 FLOPS figure represents a single model, likely to be pursued by individual hyperscalers.

This implies a future where multiple entities, including tech giants like Google, Microsoft, OpenAI, Anthropic, xAI and Meta as well as nation-states like the US and China, are each striving to build models at this scale.

“We find that training runs of 2e29 FLOP will likely be feasible by the end of this decade. In other words, by 2030 it will be very likely possible to train models that exceed GPT-4 in scale to the same degree that GPT-4 exceeds GPT-2 in scale. If pursued, we might see by the end of the decade advances in AI as drastic as the difference between the rudimentary text generation of GPT-2 in 2019 and the sophisticated problem-solving abilities of GPT-4 in 2023.” - Epoch AI

Does the AGI debate matter anymore?

The bottom line is that a 2e30 FLOPS model is feasible. Multiple private entities, and soon sovereign states, are racing to get there.

There are still lots of open questions: chips, energy, capital, returns.

Yes, all of these are good questions.

But right now, Sundar, Mark and Satya are making a Pascal’s wager.

Betting on an AGI future is safer than betting against it.

The stakes are too high.

In my view, the AGI debate is essentially irrelevant at this point. It really doesn’t matter whether we achieve AGI or how one defines it: we are headed there at hypersonic speed.

Here is an argument: we have already achieved human-level AI capabilities in many fields, and that list will keep growing.

You shoot back: “But it still can’t solve the ARC benchmark, and until recently it couldn’t count the number of r’s in ‘strawberry’.”

That is true.

But these models are chaotic in nature: their powers are unevenly distributed.

Google DeepMind's silver-medal performance at the 2024 International Mathematical Olympiad is a prime example of this.

Does it matter if someone wins a gold medal at the Maths Olympiad but doesn’t know how many r’s are in ‘strawberry’?

It doesn’t. The intelligence that solves maths problems will not be linear; it will falter at many things most humans find easy.

However, it will be able to create new drugs and win Nobel prizes.

In a sense, it was AlphaFold that won the Nobel, not Demis.

Maybe we have a very limited understanding of what intelligence is.

The question we should be focusing on is not whether we will reach AGI or human-level AI.

Instead, we need to shift our attention to how we can fully capitalise on this intelligence revolution.

It's time to move beyond digital. The transformation we need goes further than digital ever did.

We need to embrace the "intelligence" transformation.

Any real transformation starts by establishing the ground truth.

2e30 models will be here in the blink of an eye. That is the baseline truth.

Ignore it at your own peril.

There seems to be a widespread lack of urgency in addressing the implications and potential of AGI. As I said, whether or not we call it AGI doesn’t matter.

This “sleeping at the wheel” and “muddling through” mentality is dangerous and will lead to inequitable outcomes.

This is the point I want to drive home.

Putting 2e30 in Perspective

The world's GDP was about $100 trillion in 2022.

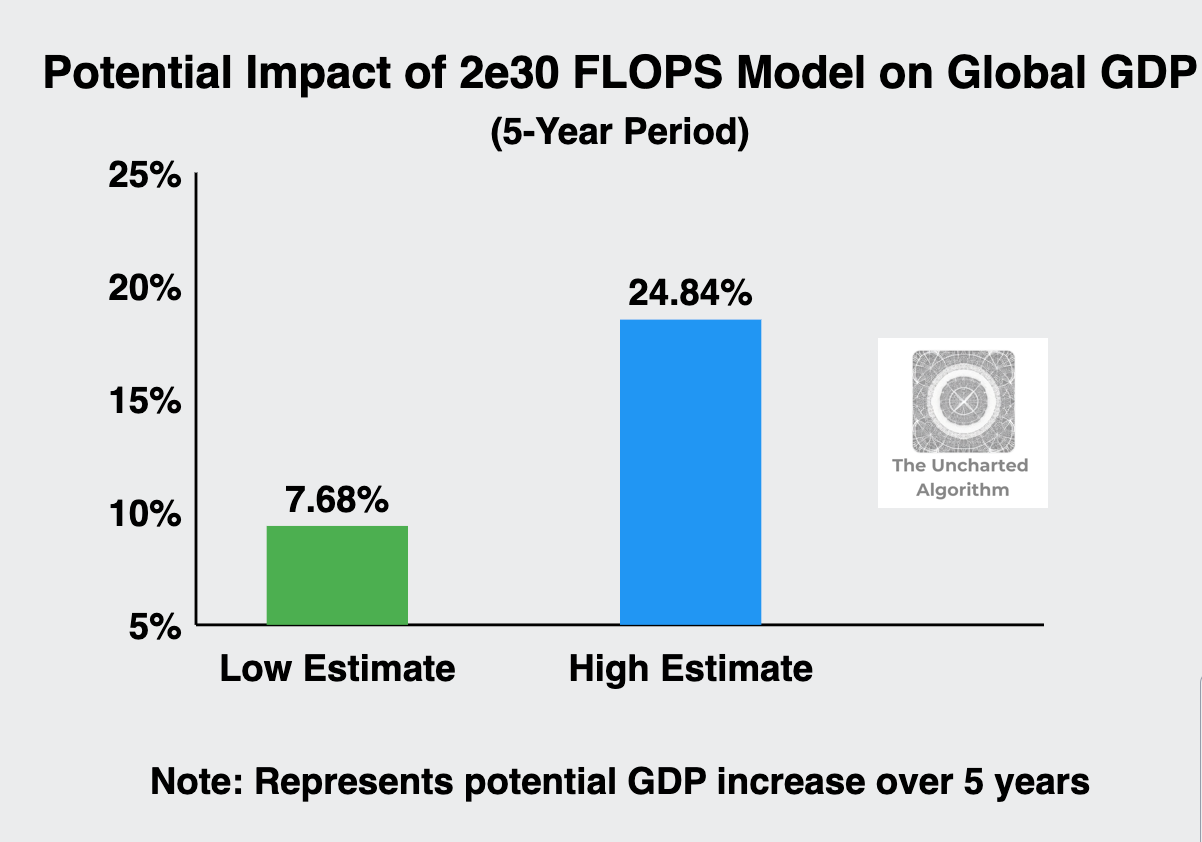

The computational power we are discussing could, in principle, analyse data on the scale of the entire global economy in all its intricacies. By my estimate, the potential impact of a 2e30 FLOPS model on global GDP over a 5-year period could range from $7.68 trillion to $24.84 trillion.

This represents a potential GDP increase of 7.68% to 24.84% over 5 years, or an average increase in the annual GDP growth rate of roughly 1.5% to 4.5% (compounded).
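The annual figures follow from compounding rather than simple division (dividing 24.84% by 5 would give closer to 5% a year). A minimal sketch, assuming the ~$100 trillion 2022 world GDP from the text as a fixed base:

```python
def annualized_rate(total_increase_fraction: float, years: float) -> float:
    """Convert a cumulative increase (e.g. 0.0768 for 7.68%) into a compound annual rate."""
    return (1.0 + total_increase_fraction) ** (1.0 / years) - 1.0


BASE_GDP_USD = 100e12  # world GDP of ~$100 trillion (2022), as stated in the text

for uplift_usd in (7.68e12, 24.84e12):
    frac = uplift_usd / BASE_GDP_USD
    print(f"{frac:.2%} over 5 years -> {annualized_rate(frac, 5):.2%} per year")
```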

Get the blinders off

Despite the staggering implications, many in business, government, and society are still operating with blinders on.

We're about to watch multiple private companies, the hyperscalers, achieve a 50,000-fold increase in AI compute in just 5-6 years, yet our preparations and discussions often fail to grasp the true scale of this impending change.
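To see why linear intuition fails here, it helps to convert a 50,000-fold increase over 5-6 years into a yearly multiplier and a doubling time. A minimal sketch, assuming smooth exponential growth over the whole period, which real compute build-outs will only approximate:

```python
import math


def annual_multiplier(total_factor: float, years: float) -> float:
    """Constant yearly growth factor that compounds to total_factor over `years`."""
    return total_factor ** (1.0 / years)


def doubling_time_months(total_factor: float, years: float) -> float:
    """Months per doubling implied by growing by total_factor over `years`."""
    return 12.0 * years * math.log(2) / math.log(total_factor)


for years in (5, 6):
    print(f"50,000x in {years} years: {annual_multiplier(50_000, years):.1f}x per year, "
          f"doubling every {doubling_time_months(50_000, years):.1f} months")
```

Compute would need to roughly double every four months, sustained for half a decade.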

Economy: How will the existence of multiple 2e30 FLOPS models reshape global economic structures and industry dynamics?

Geopolitics: What does it mean for global power dynamics when multiple companies and sovereign states possess AI capabilities of this magnitude?

Workforce: How do we prepare for a job market where AI can perform complex tasks across virtually all industries?

Innovation: What breakthroughs in science, medicine, and technology might be unlocked by multiple human-level AIs operating at this level?

Muddle through or build Dyson spheres

The world has been stuck in a state of technological stagnation.

We have been made to believe that Microsoft PowerPoint, Word and Excel are the apex of human achievement in digital transformation.

Most companies exist and survive on these technologies and people have dedicated their lives to becoming experts.

Newsflash - these tools were invented 40 years ago and they suck.

The analogy would be a whole industry of expert ‘horse cart’ drivers before the motor car was invented.

Or a battery of typewriting experts before word processors came into being. (BTW, they all moved on to the next thing without much trouble.)

Silicon Valley, the epitome of innovation, has spent the last 40 years building apps, getting us addicted to cat videos and optimising click rates.

Don’t even get me started on Apple.

We've been told to expect marginal improvements, to "muddle through."

That era ends now.

2e30 FLOPS by 2030 isn't incremental progress. It's a leap from 0 to 1.

Consider:

The last truly transformative technology was the internet.

AI at 2e30 FLOPS will dwarf that revolution.

We're not prepared for the magnitude of this shift.

Most people don't understand exponentials.

They see linear progress where exponential growth lurks.

The Kardashev scale isn't just about energy. It's about potential.

We're at 0.7. The jump to 1 and beyond requires a fundamental reimagining of civilisation.

Let's focus on accelerating intelligence and ascending the Kardashev scale. The sudden spurt of nuclear reactor projects from the hyperscalers should give you a hint.

Elon's Starship booster being caught by Mechazilla represents accelerated progress. It's not about making existing rockets better. It's about rethinking space transportation itself.

The vibe has shifted, my friend.

If you didn’t feel that when the Super Heavy booster was caught by the chopsticks on Starship Flight 5, you were asleep at the wheel.
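The "0.7" comes from Carl Sagan's continuous version of the Kardashev scale, K = (log10(P) - 6) / 10 with P in watts. A minimal sketch, assuming humanity uses roughly 20 TW of primary power today (an approximate figure, labeled as an assumption below):

```python
import math


def kardashev(power_watts: float) -> float:
    """Sagan's interpolation of the Kardashev scale: K = (log10(P) - 6) / 10, P in watts."""
    return (math.log10(power_watts) - 6.0) / 10.0


def power_for_level(k: float) -> float:
    """Inverse of kardashev(): the power in watts needed to reach level k."""
    return 10.0 ** (10.0 * k + 6.0)


HUMANITY_WATTS = 2e13  # assumption: ~20 TW of global primary energy use

print(f"Humanity today: K ≈ {kardashev(HUMANITY_WATTS):.2f}")
print(f"Type I needs {power_for_level(1.0):.0e} W, "
      f"~{power_for_level(1.0) / HUMANITY_WATTS:.0f}x today's use")
```

Because the scale is logarithmic, each further 0.1 step means ten times more power, so the move from 0.73 to 1 is a roughly 500-fold increase.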

2e30 isn't about faster computations. It's about unlocking new futures.

The roadmap isn't linear. It's convergent:

AI solves problems we can't comprehend.

Space becomes accessible.

Energy abundance through Dyson spheres.

Interstellar species level unlocked.

Each step doesn't just build on the previous. It unlocks new ones.

And let's get rid of some misconceptions.

We're not in a world of scarcity, we are building towards a future of abundance.

AI won’t kill us but muddling through might.

To truly progress:

Governments must embrace long-term, ambitious projects.

Governments should cut bureaucracy and regulations.

Governments should let entrepreneurs cook without any bounds.

Companies should ask the question - does my product fit in this new future?

Companies should retain cracked employees that operate outside normal bounds, fire the rest.

Companies should fire everyone in strategy and hire a head of ‘vibe’ check.

This isn't about optimism or pessimism. It's about determination.

The future doesn't happen by itself. It must be created.

Let's build the future we deserve.