65 % Faster Deployment: Inside the Discreet Supply Networks Creating $2 B+ in Confidential AI-Infrastructure Value

Part 3 of 3 – The Critical Intelligence Series for AI-Infrastructure Investors

(Part 1 covered the gigawatt-scale power race; Part 2 dissected cooling bottlenecks. Part 3 explains how certain operators deploy compute almost two-thirds faster by combining confidential site acquisition, adaptive inventory networks, and permitting agility.)

While headlines focus on model parameters and token pricing, a parallel ecosystem of quiet deployments, layered ownership structures, and deliberately low-visibility capacity expansions is reshaping the physical layer of AI. Operators that master these tactics achieve build schedules ≈ 65 % shorter, lower regulatory friction, and first rights to prime locations: advantages that translate directly into outsized infrastructure alpha.

Why “65 % faster” matters

Baseline: a conventional hyperscale build, from GPU purchase order to production power-on, averages 40 weeks (280 days). By overlapping site prep, staging prefabricated power rooms, and warehousing GPUs in bonded facilities, adaptive inventory networks cut the cycle to 14 weeks (98 days). Δ = 40 wk − 14 wk = 26 wk → 26 / 40 = 0.65, so 65 % of the calendar time is saved.

Result: a new AI cluster comes online nearly two-thirds sooner, roughly 26 weeks (about six months) ahead of a conventional build, a window of uncontested model-training advantage.
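A quick check of the arithmetic, as a minimal Python sketch. The only inputs are the 40-week and 14-week figures from the text; the helper name `schedule_gain` is hypothetical. It also makes explicit that "65 % faster" means 65 % less calendar time, which is the same as finishing roughly 2.9 times as fast.

```python
def schedule_gain(baseline_weeks: float, accelerated_weeks: float) -> dict:
    """Compare a baseline build schedule with an accelerated one."""
    saved_weeks = baseline_weeks - accelerated_weeks
    return {
        "baseline_days": baseline_weeks * 7,
        "accelerated_days": accelerated_weeks * 7,
        "saved_weeks": saved_weeks,
        # Fraction of baseline calendar time eliminated (the "65 %" headline figure).
        "time_saved_fraction": saved_weeks / baseline_weeks,
        # How many times faster the accelerated build completes.
        "speedup": baseline_weeks / accelerated_weeks,
    }


gain = schedule_gain(40, 14)
print(f"{gain['baseline_days']:.0f} d -> {gain['accelerated_days']:.0f} d")  # 280 d -> 98 d
print(f"time saved: {gain['time_saved_fraction']:.0%}, speed-up: {gain['speedup']:.2f}x")
# time saved: 65%, speed-up: 2.86x
```

The time-saved fraction (65 %) and the speed-up ratio (≈ 2.86×) describe the same change; the headline uses the former.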

Strategic Land Acquisition — Multi-Layered LLCs Masking Beneficial Ownership

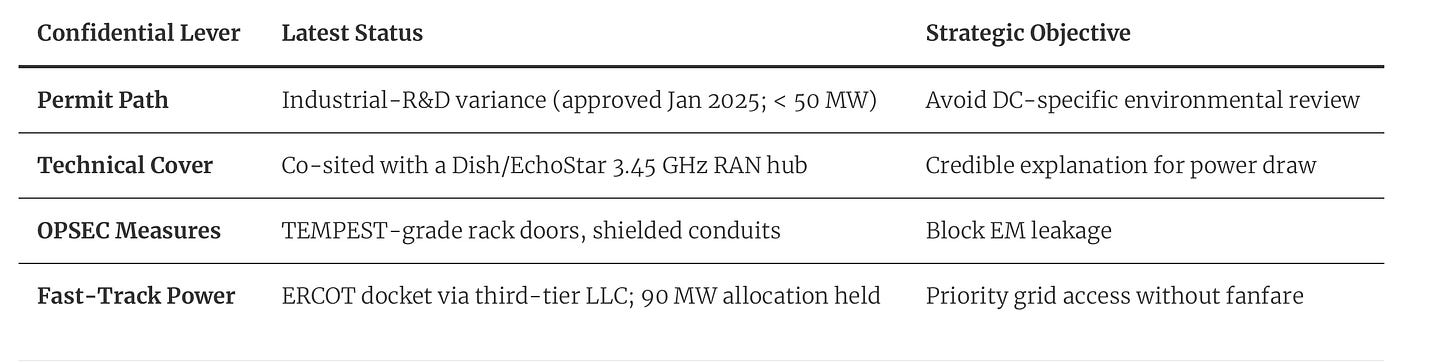

Texas Triangle (Midland-Odessa) – A modular edge-AI complex is rising under an industrial R&D permit, with military-grade EM shielding that suggests classified or highly sensitive workloads.

Wyoming–Idaho Corridor – Confidential entities have secured 200 + acres flanking newly energized 500 kV transmission segments, positioning ahead of future line completions.

Undisclosed Midwest campus – Bills of lading show immersion-cooling tanks inbound to multiple Great Lakes counties at volumes projected to reach ≈ 1 200 units / month by Q3 2025.

The common thread: organizations are locking up entire energy corridors, not just building footprints, before competitors recognize their value.

“Operators mastering adaptive inventory networks bring new AI clusters online nearly two-thirds faster, cutting deployment cycles from 40 weeks down to just 14.”

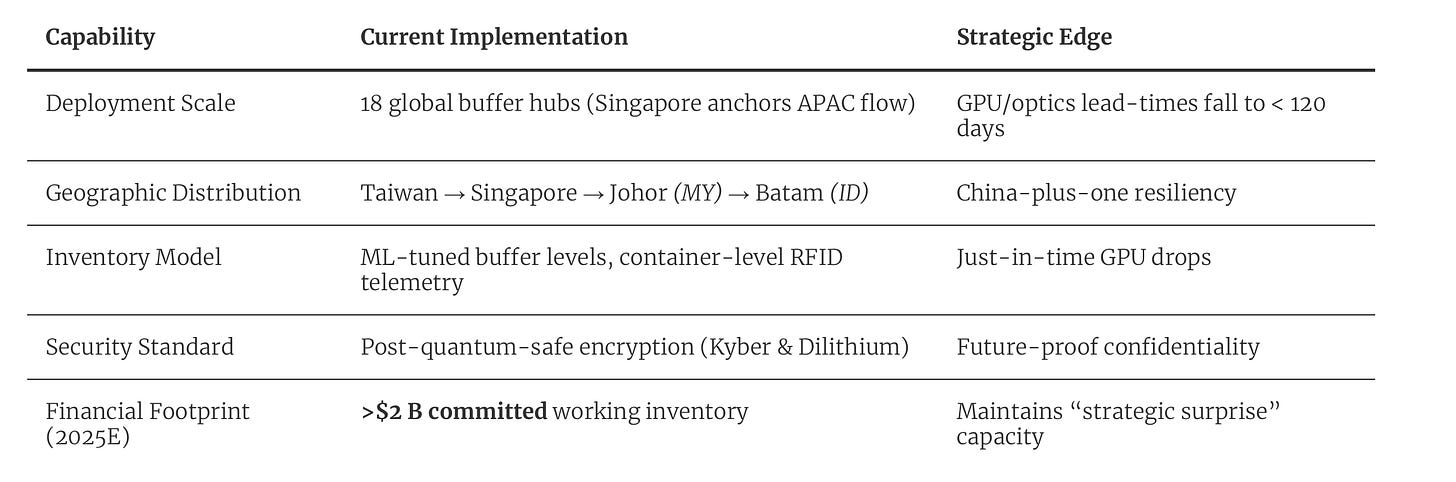

Adaptive Inventory Networks — Trimming Lead-Times by 65 %

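Adaptive inventory networks pre-position GPUs in bonded warehouses and stage prefabricated power rooms before a site is ready. Because these low-visibility logistics networks operate outside the standard distributor stack, they can absorb tariff or export-control shocks without slipping a deployment schedule.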
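The source does not break the 40-week and 14-week schedules into phases. The Python sketch below uses invented phase durations (labeled hypothetical in the code) purely to illustrate the mechanism: when GPUs are drawn from pre-positioned bonded stock and a prefabricated power room is built in parallel with site prep, total duration shrinks from the sum of the phases to the longest parallel branch. `project_duration` is a plain earliest-finish (critical-path) calculation over a dependency graph, not any vendor's scheduling tool.

```python
from typing import Dict, Iterable, Mapping


def project_duration(
    durations: Mapping[str, float],
    deps: Mapping[str, Iterable[str]],
) -> float:
    """Critical-path length (earliest project finish) of an acyclic task graph."""
    finish: Dict[str, float] = {}

    def earliest_finish(task: str) -> float:
        if task not in finish:
            # A task can start only after all of its prerequisites have finished.
            start = max((earliest_finish(d) for d in deps.get(task, ())), default=0)
            finish[task] = start + durations[task]
        return finish[task]

    return max(earliest_finish(t) for t in durations)


# HYPOTHETICAL phase durations in weeks, chosen only so the totals match 40 and 14.
conventional = {"gpu_lead_time": 18, "site_prep": 8, "power_room": 8, "install": 6}
conventional_deps = {
    "site_prep": ["gpu_lead_time"],  # assumed: site work not committed until GPUs are secured
    "power_room": ["site_prep"],     # power room built on site after prep
    "install": ["power_room"],
}

adaptive = {"gpu_lead_time": 0, "site_prep": 8, "power_room": 8, "install": 6}
adaptive_deps = {
    # GPUs already sit in bonded stock (lead time 0); the prefabricated power
    # room is built off-site in parallel with site prep.
    "install": ["gpu_lead_time", "site_prep", "power_room"],
}

print(project_duration(conventional, conventional_deps))  # 40
print(project_duration(adaptive, adaptive_deps))          # 14
```

The design point is that overlap, not faster individual tasks, produces the saving: every phase keeps its hypothetical duration, and only the dependency graph changes.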

Regulatory-Classification Optimization — Working the Rulebook

Filing under industrial R&D (rather than “data center”) classifications sidesteps energy-use disclosure in several U.S. states.

Mid-band 3.45 GHz tower co-locations provide a plausible cover story for elevated power draws.

Staged permit filings keep each application below thresholds that trigger full environmental reviews, while substations are procured through separate LLCs.

In agricultural districts, “precision-ag research” designations unlock expedited water rights and tax abatements.


Tariff Navigation — Cross-Border Engineering After Section 301 Escalations

NVIDIA / Foxconn – Final GPU-board assembly is relocating to Chihuahua, MX, where boards can qualify for zero duty under USMCA once regional value content (RVC) rules are met.

Immersion-cooling integrators – Dielectric-fluid bladders pre-filled in a Monterrey FTZ, shipped as North-American sub-assemblies to bypass 50 % fluid duties.

Disassembly–reassembly loops at Otay Mesa and Santa Teresa keep line-item customs values under escalation thresholds.

Arizona–Sonora 1.5 GW AI corridor – Twin substations on each side of the border hedge U.S. power-price volatility while leveraging Mexico’s faster 230 kV permitting.
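The NVIDIA / Foxconn item hinges on USMCA's regional value content test. A minimal Python sketch of the agreement's two RVC formulas: transaction value, RVC = (TV − VNM) / TV × 100, and net cost, RVC = (NC − VNM) / NC × 100, where VNM is the value of non-originating materials. All dollar figures and both thresholds below are hypothetical; the applicable method and threshold depend on each product's specific rule of origin, and some products qualify on tariff-shift rules alone.

```python
def rvc_transaction_value(tv: float, vnm: float) -> float:
    """Regional value content (%) under the transaction-value method."""
    if tv <= 0:
        raise ValueError("transaction value must be positive")
    return 100 * (tv - vnm) / tv


def rvc_net_cost(nc: float, vnm: float) -> float:
    """Regional value content (%) under the net-cost method."""
    if nc <= 0:
        raise ValueError("net cost must be positive")
    return 100 * (nc - vnm) / nc


# HYPOTHETICAL figures in USD for one assembled unit; not sourced data.
TV = 10_000.0  # transaction value of the finished good
NC = 9_000.0   # net cost of the finished good
ASSUMED_TV_THRESHOLD = 60.0  # illustrative; real thresholds are product-specific
ASSUMED_NC_THRESHOLD = 50.0

for label, vnm in (("mostly regional inputs", 3_500.0), ("mostly imported inputs", 7_000.0)):
    tv_pct = rvc_transaction_value(TV, vnm)
    nc_pct = rvc_net_cost(NC, vnm)
    # Assumes the rule permits either method; some rules mandate one.
    qualifies = tv_pct >= ASSUMED_TV_THRESHOLD or nc_pct >= ASSUMED_NC_THRESHOLD
    print(f"{label}: RVC(TV)={tv_pct:.1f}%, RVC(NC)={nc_pct:.1f}%, qualifies={qualifies}")
```

The second case shows why "once regional-value rules are met" is the binding condition: relocating final assembly alone does not qualify a good whose value is dominated by imported components.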

Latent Capacity — The Invisible Compute Reserve

Major hyperscalers now keep 5 – 10 % of total megawatt capacity off public books, often inside legacy shells with “lights-half-out” aisles masking dense liquid-cooled rows.

Prefabricated container clusters (2 – 4 MW each) sit warehoused in Reno and Laredo, ready to roll within 30 days.

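Because those off-book megawatts sit disproportionately in dense, liquid-cooled AI rows, their share of AI compute exceeds their share of power; industry trackers therefore understate real AI FLOPs by double-digit percentages.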
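The step from 5 to 10 % of megawatts to a double-digit FLOP gap depends on how much denser the hidden rows are than disclosed capacity. A minimal Python sketch, assuming a hypothetical `density_ratio` (AI FLOPs per MW in off-book rows relative to disclosed rows); the source gives no such figure, so the values swept below are illustrative only.

```python
def hidden_flop_share(hidden_mw_share: float, density_ratio: float) -> float:
    """Share of total AI FLOPs sitting in off-book capacity.

    hidden_mw_share: fraction of total megawatts kept off public books (0 to <1).
    density_ratio:   HYPOTHETICAL FLOPs-per-MW of hidden rows relative to
                     disclosed capacity (1.0 means equally dense).
    """
    if not 0.0 <= hidden_mw_share < 1.0:
        raise ValueError("hidden_mw_share must be in [0, 1)")
    hidden = hidden_mw_share * density_ratio
    disclosed = 1.0 - hidden_mw_share  # disclosed density normalized to 1
    return hidden / (hidden + disclosed)


# A tracker that sees only disclosed capacity misses exactly this share of total FLOPs.
for mw_share in (0.05, 0.10):
    for density in (1.0, 2.0, 3.0):
        share = hidden_flop_share(mw_share, density)
        print(f"{mw_share:.0%} of MW hidden, {density:.0f}x density -> {share:.1%} of FLOPs missed")
```

With equal density the missed share simply equals the hidden megawatt share; denser hidden rows push it into double digits, e.g. about 18 % of FLOPs for 10 % of megawatts at twice the density.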

“Filing under industrial R&D rather than ‘data center’ classifications sidesteps energy-use disclosures, unlocking faster permitting and advantageous tax treatment.”

Case Study — Texas Triangle Quiet Build

“Quiet builds beat public roadmaps by months.”

Investment Implications

Areas of Outperformance

Adaptive Supply-Chain Orchestrators: Companies that can reliably guarantee GPU delivery within 120 days are set to dominate, because deployment speed translates directly into competitive advantage in AI infrastructure.

Cross-Border Infrastructure Developers: Specialists in USMCA-qualified campuses who can capture tariff exemptions and operate efficiently along strategic corridors, such as the Arizona–Sonora AI corridor, will outperform their peers.

Reg-Tech Advisers: Boutique firms with deep knowledge of multi-state regulatory frameworks and R&D exemptions can substantially reduce friction for operators, accelerating timelines and unlocking tax and operational advantages.

Prefabricated-Module Suppliers: Vendors providing modular, scalable solutions such as Vertiv-class MegaMod units (ranging from 0.5 MW to 1 MW, expandable to 2 MW+ in liquid-cooled deployments) offer crucial flexibility and rapid deployment, positioning them as strategic partners in agile infrastructure rollouts.

Complex-Entity Service Providers: Trust and corporate-secretary firms that create and manage multi-layered, multi-jurisdictional entities provide the confidentiality and strategic agility that are critical to first-mover advantage in the competitive AI infrastructure market.

“Operators are warehousing compute like weapons-grade assets.”

Risk Flags

Retroactive Regulatory Enforcement: Operators leveraging R&D designations and industrial classifications for permitting and tax benefits face risks if regulatory bodies redefine classifications, potentially triggering costly retroactive compliance measures.

Transparency and ESG Pressure: Growing demands from Limited Partners (LPs) and ESG committees for detailed Scope 2 disclosures (emissions from purchased electricity, which expose underlying energy use) could erode the operational flexibility that stealth deployments enjoy, potentially slowing or complicating expansion plans.

Regional Over-Concentration: High-density deployments in specific regions (notably Texas, northern Mexico, and the Wyoming–Idaho corridor) expose operators to heightened risk from localized policy shifts, climatic events, or grid instability.

Governance Complexity: The ‘labyrinthine’ ownership structures and multi-tier LLC stacks designed for confidentiality and regulatory navigation may inadvertently introduce governance inefficiencies, opacity, and internal compliance friction, potentially impacting operational responsiveness.


Conclusion — The Discreet Deployment Edge

Throughout this three-part series, we've shown that strategic mastery of physical infrastructure, spanning power procurement, advanced cooling, and discreet deployment tactics, is the critical differentiator driving the next wave of AI innovation. While public attention remains fixated on algorithmic advancements, token economies, and digital infrastructure, it is unseen operational agility that will dictate true competitive advantage.

Organizations adept at navigating confidentiality constraints, leveraging adaptive inventory networks, and exercising permitting agility will consistently secure crucial first-mover positions. This discreet deployment capability, operating largely beneath the industry's radar, will enable savvy operators to capture prime locations, shorten deployment timelines, and command the lion’s share of emerging AI infrastructure alpha before competitors even become aware of the opportunity.

For investors and stakeholders, recognizing and positioning for these quiet operational strategies will be essential for capturing long-term value in the rapidly evolving AI infrastructure landscape.

This analysis is based on Persevera AI's AI DataCenter Signals Intelligence service, which provides investment firms and other AI Infrastructure stakeholders with detailed analysis of emerging trends, hidden opportunities, and strategic risks in AI infrastructure. Our intelligence reveals critical opportunities and risks hidden from standard market analysis, giving investors the asymmetric information advantage needed to identify emerging trends, make superior investment decisions, and generate alpha in the rapidly evolving AI infrastructure landscape.

To request the full intelligence package, including our complete analysis of power infrastructure trends, cooling technology bottlenecks, and stealth deployment strategies, visit https://persevera.ai/ai-datacenter-signals-intelligence or contact our team directly.