Beyond the Societal Backlash: Reframing the AI Datacenter Debate

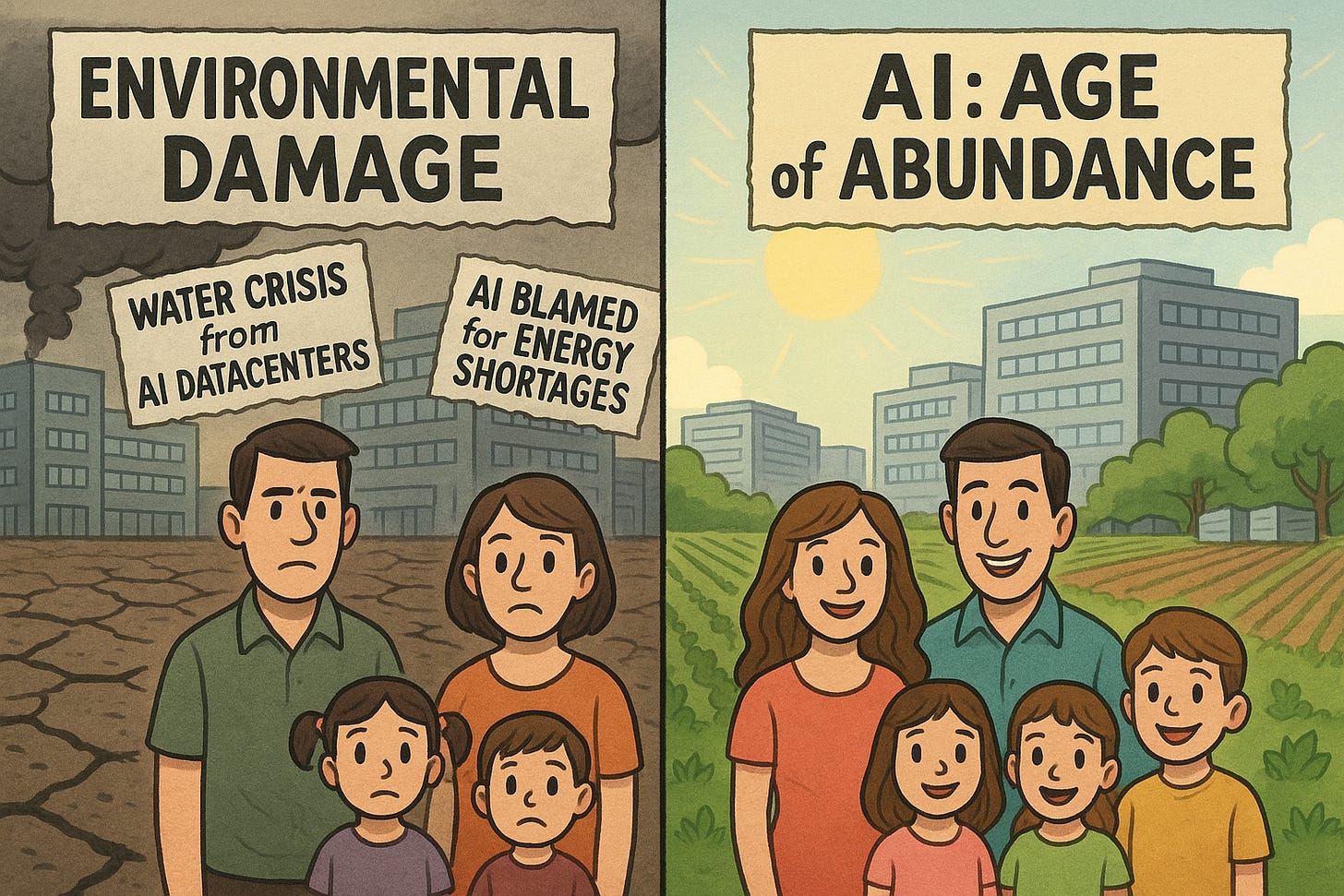

Two Futures, One Choice: Whether AI Datacenters Deepen the Strain or Drive the Fix

The recent flood of stories painting AI datacenters as agents of crisis, exemplified by the latest NYT hit piece, ‘From Mexico to Ireland, Fury Mounts Over a Global A.I. Frenzy’, reveals a troubling instinct in how we narrate innovation. These are the stories that dominate headlines and splash somber images across the page. AI is clearly undergoing a PR crisis.

These pieces often reach for drama with stark photos of blackouts, cracked fields, and solemn animals, stitched together with loaded language that casts transformative infrastructure as an easy villain.

But this style of coverage simplifies what’s really happening. Complex, decades-old challenges rooted in neglected utilities, bad policy, and economic stress get collapsed into the arrival of new technology, as if innovation itself bears sole responsibility for the hardship. Where nuance is needed, we get blame; where analysis might help, we get outrage. It’s easier to condemn what’s new than confront the slow grind of systemic failure that made these regions vulnerable in the first place.

Lost in the noise is a basic truth: AI datacenters are also the engines of the kind of solutions the world desperately needs, from accelerating medical breakthroughs to smarter resource management. As we wrestle with the big problems of our era, it’s not enough to frame the future as a threat. We risk missing the very tools with the greatest potential to turn things around.

The Pre-Existing Crisis Nobody Mentions

When communities in Querétaro, Mexico or western Ireland experience power outages and water shortages, AI datacenters make convenient scapegoats. Yet the evidence tells a more complex story. Ireland’s electrical grid has struggled with capacity constraints since the early 2010s, long before the current AI boom, due to decades of underinvestment in transmission infrastructure and heavy reliance on imported energy. Mexico’s Querétaro region has faced chronic blackouts since the 2000s stemming from an overextended national grid, aging infrastructure, and rapid industrial growth that predates any significant tech investment.

Water scarcity in these regions similarly reflects structural problems: agricultural overuse consuming 70% of Mexico’s water supplies, seasonal droughts in Ireland’s urban centers, and municipal systems designed for populations half their current size. These are systemic failures of planning, investment, and governance accumulated over generations.

AI datacenters have undoubtedly intensified these pressures, adding 10-30% to local resource demands in some areas, but they did not create them. The distinction matters profoundly.

Blaming datacenters for infrastructure collapse is like blaming the final passenger for a bridge collapse when the structure was already crumbling. It absolves decades of policy failure while potentially blocking solutions.

The Innovation Revolution Hidden in Plain Sight

What coverage of AI datacenters consistently underplays is the pace of efficiency innovation they have driven: gains made in a few years that previously took decades.

Modern AI facilities achieve Power Usage Effectiveness (PUE) ratios of 1.1 (10% overhead power consumption beyond the computing load), compared to 1.5-2.0 in legacy systems. Against the upper end of that legacy range, this represents roughly 40% energy savings for the same computing load, achieved through AI-optimized airflow and dynamic power management.
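The arithmetic behind that comparison is easy to check. The sketch below assumes the standard definition of PUE (total facility energy divided by IT equipment energy) and holds the computing load constant; the function names are illustrative and not taken from any vendor tool. Under those assumptions, moving from a legacy PUE of 1.5-2.0 to 1.1 cuts total facility energy by roughly 27% to 45%.

```python
def facility_energy(it_load_kwh: float, pue: float) -> float:
    """Total facility energy for a given IT load, using PUE = total / IT."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kwh * pue


def savings_vs_legacy(modern_pue: float, legacy_pue: float) -> float:
    """Fractional reduction in total facility energy at the same IT load."""
    return (legacy_pue - modern_pue) / legacy_pue


# Compare a PUE of 1.1 against several points in the legacy range.
for legacy in (1.5, 1.8, 2.0):
    saved = savings_vs_legacy(1.1, legacy)
    print(f"legacy PUE {legacy}: {saved:.0%} less total energy")
```

Note that the saving depends heavily on which legacy baseline is chosen, so any single headline percentage should be read alongside the PUE it is measured against.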

Cooling technologies have advanced more in the past three years than in the previous two decades. Direct-to-chip liquid cooling systems now handle 120 kW racks while consuming 30-50% less energy than air-based methods, with immersion cooling deployed in 40% of new builds, enabling waste heat capture for urban heating systems. Google’s DeepMind AI reduced datacenter cooling energy by 40% through predictive optimization, an innovation that retrofits to non-AI facilities, lowering industry-wide consumption.

Water recycling has evolved from aspirational to standard, with closed-loop systems achieving 95% reuse rates and cutting freshwater consumption by 20% industry-wide since 2020. These breakthroughs stem directly from AI workloads’ density demands; without this forcing function, such rapid iteration would likely not have occurred.

The Double Standard of Digital Infrastructure

The contrast with the social media and cloud computing boom of the 2010s is striking and revealing. That era expanded datacenters 10x, eventually consuming 1.5% of global electricity, yet faced minimal “villain” framing despite comparable resource scaling.

Amazon, Google, and Facebook built massive facilities with PUEs above 1.8 and substantial water footprints, but public discourse emphasized connectivity benefits and digital transformation.

Why the difference? Partly, AI is growing faster, expanding about 3x as quickly as cloud did. But the fundamental shift is rhetorical. The 2010s cloud build-out occurred before climate awareness reached critical mass and ESG mandates became standard. Today’s AI datacenters integrate renewables from inception, target carbon neutrality, and face rigorous environmental review that their cloud-era predecessors avoided.

This double standard reveals less about AI’s unique harm than about evolving societal expectations. We should demand better, but we should also recognize that AI infrastructure already operates under far stricter standards than the digital systems we take for granted.

Causation, Correlation, and the Attribution Challenge

The question of how much AI datacenters directly cause versus correlate with resource issues deserves careful examination.

In Ireland, datacenters consume 20% of national electricity, up from 5% in 2015, directly contributing to peak-hour shortfalls that trigger load-shedding. In Mexico’s Querétaro, Microsoft’s 12.6 MW facility adds measurable demand to a grid operating at 90% capacity pre-expansion, correlating with 30% increased blackout frequency.

Yet “correlation” does not equal sole causation. These facilities prioritize uninterruptible power during outages, potentially extending disruptions for other users, but they also pay premium rates that fund grid upgrades benefiting everyone. They consume millions of gallons for cooling, depleting aquifers, but also invest in recharge projects and wastewater recycling that improve regional water management beyond datacenter needs.

Companies like Microsoft claim minimal “direct link” to community problems, citing efficiency measures—claims that third-party audits sometimes contest but that also reflect genuine mitigation efforts.

The reality sits between extremes: datacenters measurably worsen pre-existing strains while simultaneously introducing capital and technology to address root causes that governments and utilities failed to fix for decades.

The Workload Reality Check

A crucial but often overlooked fact: only about 33% of current datacenter capacity globally serves AI workloads, with projections of 70% by 2030. This means the majority of resource consumption in facilities criticized as “AI datacenters” actually supports traditional cloud services, streaming, social media, and enterprise applications that face far less scrutiny.

In hyperscale facilities in Ireland and Mexico, AI/ML workloads account for 30-40% of compute loads at best, with the remainder serving conventional digital services we all depend on daily. Attributing all infrastructure impact to AI misrepresents the situation and obscures accountability for the full digital ecosystem.
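To make the attribution point concrete, here is a minimal sketch that splits a facility's resource use by workload share. It assumes consumption scales in proportion to compute share, which is a simplification (AI racks are denser and may draw disproportionate power and cooling), and the function name and the 100-unit total are purely illustrative.

```python
def split_by_workload(total: float, ai_share: float) -> tuple[float, float]:
    """Split a facility's resource use into (AI, non-AI) portions.

    Assumes consumption is proportional to compute share, which is a
    simplifying assumption rather than a measured relationship.
    """
    if not 0.0 <= ai_share <= 1.0:
        raise ValueError("ai_share must be between 0 and 1")
    ai_portion = total * ai_share
    return ai_portion, total - ai_portion


# Shares quoted in the text: 30-40% in hyperscale sites, ~33% globally.
for share in (0.30, 0.33, 0.40):
    ai, other = split_by_workload(100.0, share)
    print(f"AI share {share:.0%}: AI = {ai:.0f} units, everything else = {other:.0f} units")
```

Even at the top of the quoted range, most of each 100 units consumed is attributable to conventional digital services under this proportional assumption.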

The Transformative Potential We Risk Losing

The stakes of this debate extend far beyond infrastructure politics. AI datacenters enable breakthrough applications with civilization-scale implications.

Here are some specific breakthroughs attributed to large language models (LLMs) and advanced reasoning AI from the last six months (mid-2025), all of which point towards a tipping point where AI systems advance scientific discovery at an unprecedented pace.

AlphaEvolve: Math & Chip Design

DeepMind’s AlphaEvolve, a reasoning-augmented LLM, discovered new solutions to open mathematics problems and optimized the design of next-generation AI chips (tensor processing units). It also recovered roughly 0.7% of Google’s worldwide compute resources, which represents a multi-million dollar impact.

Math Olympiad — Gold Medal Performance by LLMs

In July 2025, an experimental OpenAI reasoning model achieved gold-medal-level scores on International Math Olympiad problems, using advanced “chain-of-thought” and reinforcement learning (RL) reasoning. This marks the first time an AI system has performed at gold-medalist level in global math competitions, with profound implications for scientific research and breakthroughs.

Medical Reasoning Surpassing Human Average

Reasoning-based LLMs have been tested on medical social skill benchmarks, surpassing average human performance in simulated clinical scenarios. These LLMs now diagnose, summarize, and provide empathetic responses as well as (or better than) typical medical students.

MIT’s Test-Time Training: Real Complex Reasoning Gains

MIT’s July 2025 breakthrough showed that temporarily allowing LLMs to “learn” during prediction dramatically boosts their accuracy for novel, complex reasoning tasks (e.g., IQ-style and multi-step clinical puzzles), up to 6x improvement over previous models.

Scientific Discovery: Accelerating Astronomical Event Detection

Oxford and Google demonstrated that new general-purpose LLMs can accurately detect and classify astronomical events (like supernovae) from tiny data sets, improving telescope science and real-time cosmic monitoring.

Empirical Software & Agentic Reasoning in Science

Google Research’s LLM-powered empirical software tools produced expert-level results on challenging scientific coding problems, enabling scientists to prototype and optimize experiments far faster than before.

Solutions Over Stoppage

The path forward requires moving beyond vilification toward practical mitigation:

Mandate renewable integration: Hyperscalers can achieve 100% renewable energy by 2030, cutting emissions 50% while stabilizing grids through demand flexibility. Policy frameworks like the EU’s AI Act could enforce this, making clean energy infrastructure a prerequisite for permits, which is not the case currently.

Deploy advanced cooling universally: Immersion cooling, direct-to-chip liquid systems, and air-side economizers can cut water consumption 95% while capturing waste heat for district heating. Requiring these technologies as licensing conditions ensures best practices become standard.

Invest in grid modernization: Datacenter tax revenues should fund transmission upgrades, energy storage, and smart grid technology benefiting all users. Ireland’s €4.3 billion in datacenter economic contributions could modernize national infrastructure if directed appropriately.

Implement transparent monitoring: Real-time public dashboards showing datacenter resource use, efficiency metrics, and community impact would enable accountability while dispelling misinformation. Opacity fuels backlash; transparency builds trust.

Co-locate with renewables: Building datacenters near solar, wind, or geothermal sources, with on-site generation and storage, makes them grid-positive rather than grid-dependent. Projects like the UAE’s solar-powered facilities demonstrate viability.

Prioritize brownfield redevelopment: Retrofitting existing industrial sites rather than greenfield expansion minimizes land use and leverages existing infrastructure, as retrofit-first advocates increasingly recommend.

A Question of Narrative and Framing

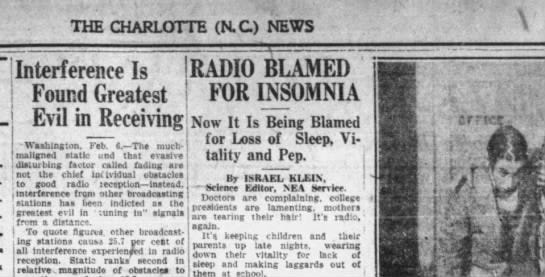

We’ve been villainizing new technologies for hundreds of years, and this backlash against AI datacenters is only the latest chapter in a long tradition.

One of my favourite sites, ‘The Pessimists Archive’, documents how successive waves of innovation, from the bicycle to the radio, photography, typewriters, and the internet, were met with moral panic, technophobia, and fierce resistance nearly identical to the fears targeting AI and datacenter infrastructure today.

Historical Technophobia Highlights

Radio in the 1920s-1940s: Headlines warned it would “ruin culture,” corrupt youth, and erode social skills, fears that echo current worries about tech overload.

Photography and “fake photos” (1912): The same anxieties about “deepfakes” and image manipulation gripped society over a century ago.

Typewriter (1898): Critics said it would “decay letter-writing” and destroy the romance of personal correspondence.

Bicycle, Electricity, Comics, Calculators, the Internet: All spawned fears of moral decay, social unrest, or cognitive decline.

The history is clear. Every transformative technology is initially framed as a social or environmental threat, only to be later recognized as a catalyst for progress.

Today’s backlash against AI datacenters is not unprecedented; it is the default reaction to change, often channeling broader frustrations with systemic problems onto whatever is new and powerful.

Conclusion: Innovation, Not Villainization

AI datacenters are not unique villains but are the latest subject in a recurring societal script. We cannot afford to repeat the mistake of confusing mitigation with obstruction. History shows that managed innovation, not technophobia, is the way forward: demanding real accountability while still harnessing the benefits new infrastructure enables for everyone.

AI datacenters should be understood not as villains exploiting vulnerable communities, but as revealing infrastructure deficits that governments failed to address for generations. They intensify problems but also bring capital, technology, and urgency that could finally solve them.

Rather than stopping development, we should demand better development: 100% renewable power, closed-loop water systems, transparent monitoring, community benefit agreements, and grid investment that serves everyone. We should recognize that the same AI running in these datacenters could optimize resource use across entire economies, making their own consumption negligible by comparison.

Most importantly, we should ask whether critical coverage adequately balances costs against benefits, and whether the narrative serves truth or merely channels frustration at complex systemic failures onto convenient targets. AI datacenters aren’t perfect, but they’re also not the enemy.

The choice isn’t between AI datacenters and pristine environments. It’s between managed, efficient, accountable development that funds infrastructure fixes while enabling transformative applications, or unmanaged development elsewhere combined with continued neglect of underlying problems. When framed honestly, the path forward becomes clear.
