Issue #13 - OpenAI vs. The New York Times: A Copyright Turning Point in the AI Era

Exploring GenAI & LLMs' Copyright Landscape; The OpenAI-NYT Lawsuit; Embracing Open Source Innovation; Harnessing Data Marketplaces; Learning from Industry Pioneers; Strategies for Enterprise Evolution

The debate around Generative AI, Large Language Models (LLMs) and copyright infringement is intensifying, especially since The New York Times (NYT) sued OpenAI and Microsoft in December 2023. The case has brought the complexities of copyright law in the AI era to the forefront: the NYT alleges that OpenAI's GPT models were trained on datasets that included millions of its copyrighted articles.

“I take a contrarian view on this whole issue of copyright with respect to Generative AI and LLMs. In my view, enterprises should not view these copyright challenges merely as obstacles but as opportunities for innovation and competitive advantage. The open source movement, which revolutionised the software industry, serves as a precedent for how a similar paradigm shift could occur with Generative AI and LLMs.”

By embracing a forward-thinking approach, enterprises can navigate copyright complexities and potentially create new business models and revenue streams. This could involve establishing data marketplaces for monetising non-sensitive data assets or LLM marketplaces for sharing, trading, or licensing models and datasets.

In this newsletter, we will explore the intricacies of copyright issues in Generative AI and LLMs, drawing parallels with the open source revolution. We will examine the OpenAI vs. NYT lawsuit as a case study that underscores the urgency of revisiting copyright laws.

The goal is to inspire enterprises to view copyright not as a hurdle but as a catalyst for innovation, paving the way for new forms of content creation and distribution in the AI space.

Understanding Copyright Issues in Generative AI and LLMs

Navigating the intersection of copyright law and AI-generated content is a complex task. Traditional copyright law, designed to safeguard human creators and their original works of authorship, is being challenged by the advent of Generative AI and LLMs. These models, capable of generating human-like text, images and audio, blur the lines of authorship and prompt new questions about the applicability of existing copyright laws.

A central debate in this context is the distinction between the extraction of ideas and the copying of expression. Copyright law traditionally protects the expression of ideas, not the ideas themselves, a principle known as the ‘idea-expression distinction’. However, its application to Generative AI and LLMs is not straightforward.

LLMs are probabilistic models: at each step of generation they assign probabilities to the possible next words (tokens) and produce output by sampling from those probabilities rather than by following fixed rules, which is why the same prompt can yield different responses. When an LLM is trained on a dataset, it learns patterns and structures from the data; it is not designed to reproduce that data verbatim. Instead, it generates new content, in a given context, based on what it has learned. This process can be seen as akin to extracting ideas or concepts from the data, rather than copying the expression of those ideas. If this perspective is accepted, it could redefine the copyright issue, suggesting that LLMs are not infringing on copyright but rather extracting and learning from the ideas present in the data.
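To make the idea of generating output "based on probability rather than fixed rules" concrete, here is a minimal sketch of the sampling step most text generators use: raw scores for candidate next tokens are turned into probabilities with a softmax (optionally scaled by a temperature) and one token is drawn at random according to those probabilities. The four-word vocabulary and the scores are invented for illustration; in a real LLM the vocabulary has tens of thousands of tokens and the scores come from the trained network.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature=1.0, rng=random):
    """Draw one next token at random, weighted by the model's probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores a model might assign to candidate next words
# after a prompt such as "The court ruled in favour of ..."
vocab = ["Google", "OpenAI", "the", "publishers"]
logits = [2.0, 1.5, 0.3, 1.0]

rng = random.Random(42)
print([sample_next_token(vocab, logits, 0.8, rng) for _ in range(5)])
```

Running the last line with different seeds can produce different sequences, which is the sense in which generation is non-deterministic; the probabilities themselves are a fixed function of the model's learned weights and the prompt.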

“In essence, LLMs are designed as creators of ideas, not as generators of plagiarised text.”

Another legal concept that could potentially apply to Generative AI and LLMs is the ‘fair use’ defence. Fair use is a US legal doctrine that allows limited use of copyrighted material without obtaining permission from the rights holders. It is evaluated on a case-by-case basis, considering four factors: the purpose and character of the use, the nature of the copyrighted work, the amount and substantiality of the portion used, and the effect of the use on the market for the original work.

In the context of AI and LLMs, one could argue that the use of copyrighted material in training these models is transformative, meaning it adds something new or changes the original work in a significant way. If deemed transformative, the use of copyrighted material could potentially be protected under the fair use doctrine. However, this argument is still being debated and has not been definitively settled in court.

Lastly, the concept of "post-plagiarism" has been introduced in the AI discourse. This concept views the text generated by AI apps as a hybrid of human and AI authorship, where humans and technology co-write text. It challenges traditional notions of authorship and plagiarism and could potentially influence how copyright laws are interpreted and applied to AI-generated content. In my opinion, this is the strongest argument in favour of Generative AI & LLMs.

In conclusion, while the copyright issues surrounding Generative AI and LLMs are complex and still being resolved, there are compelling arguments for enterprises to consider. These arguments could potentially redefine the traditional understanding of copyright and open up new avenues for innovation and competitive advantage.

OpenAI and The New York Times Lawsuit: A Different Perspective

The ongoing lawsuit between OpenAI and The New York Times (NYT) is a significant case at the intersection of AI and copyright law. The NYT alleges that OpenAI's GPT models were trained on datasets that included its articles, and that this constitutes copyright infringement. OpenAI counters that its use of the data falls under the fair use doctrine, arguing that it is transformative and does not harm the market for the original work.

In my opinion, the lawsuit has broader implications, highlighting the struggle of traditional media to maintain control over its content in the face of rapidly advancing AI technology. The NYT, like many other traditional media outlets, is grappling with the reality that its content is being used to train AI models without direct compensation. So is this lawsuit about copyright or compensation?

While copyright can lead to compensation in certain scenarios, the two are not inherently tied together. The primary purpose of copyright is to protect the rights of creators over their work; compensation is a potential outcome of that protection, not its main goal. These outlets may seek compensation for the use of their content, but it is not a straightforward copyright issue: as discussed earlier, the use of such content by AI could fall under fair use, in which case no compensation would be owed.

As a writer of a paywall-protected newsletter, I would not object to AI training on my content, as long as it's not regurgitated word for word. This perspective supports the notion that AI is not infringing on copyright but rather extracting and learning from the ideas present in the data.
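One way to make "not regurgitated word for word" testable is to measure the longest run of consecutive words that a model's output shares with a source text. The sketch below does this with a standard dynamic-programming longest-common-substring over word tokens. The function names and the 8-word threshold are hypothetical choices for illustration, not a legal test or an industry standard.

```python
import re

def words(text):
    """Lower-case word tokens, ignoring punctuation."""
    return re.findall(r"[a-z0-9']+", text.lower())

def longest_shared_run(output, source):
    """Length (in words) of the longest run of consecutive words found in both texts."""
    a, b = words(output), words(source)
    best = 0
    prev = [0] * (len(b) + 1)  # run lengths ending at each position of b, for the previous word of a
    for i in range(1, len(a) + 1):
        cur = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                cur[j] = prev[j - 1] + 1  # extend the run ending one word earlier in both texts
                best = max(best, cur[j])
        prev = cur
    return best

def looks_regurgitated(output, source, threshold=8):
    """Hypothetical rule: flag outputs that copy `threshold` or more consecutive words."""
    return longest_shared_run(output, source) >= threshold

source = "The quick brown fox jumps over the lazy dog near the river bank today"
paraphrase = "A fast fox leapt over a sleepy dog by the river"
copied = "He wrote: the quick brown fox jumps over the lazy dog near the river."

print(longest_shared_run(paraphrase, source))  # 2 ("the river")
print(longest_shared_run(copied, source))      # 12
```

The paraphrase shares only isolated words and a two-word phrase with the source, while the copied sentence reproduces a 12-word run; where exactly to draw the line between learning and regurgitation is the judgement the lawsuit will test.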

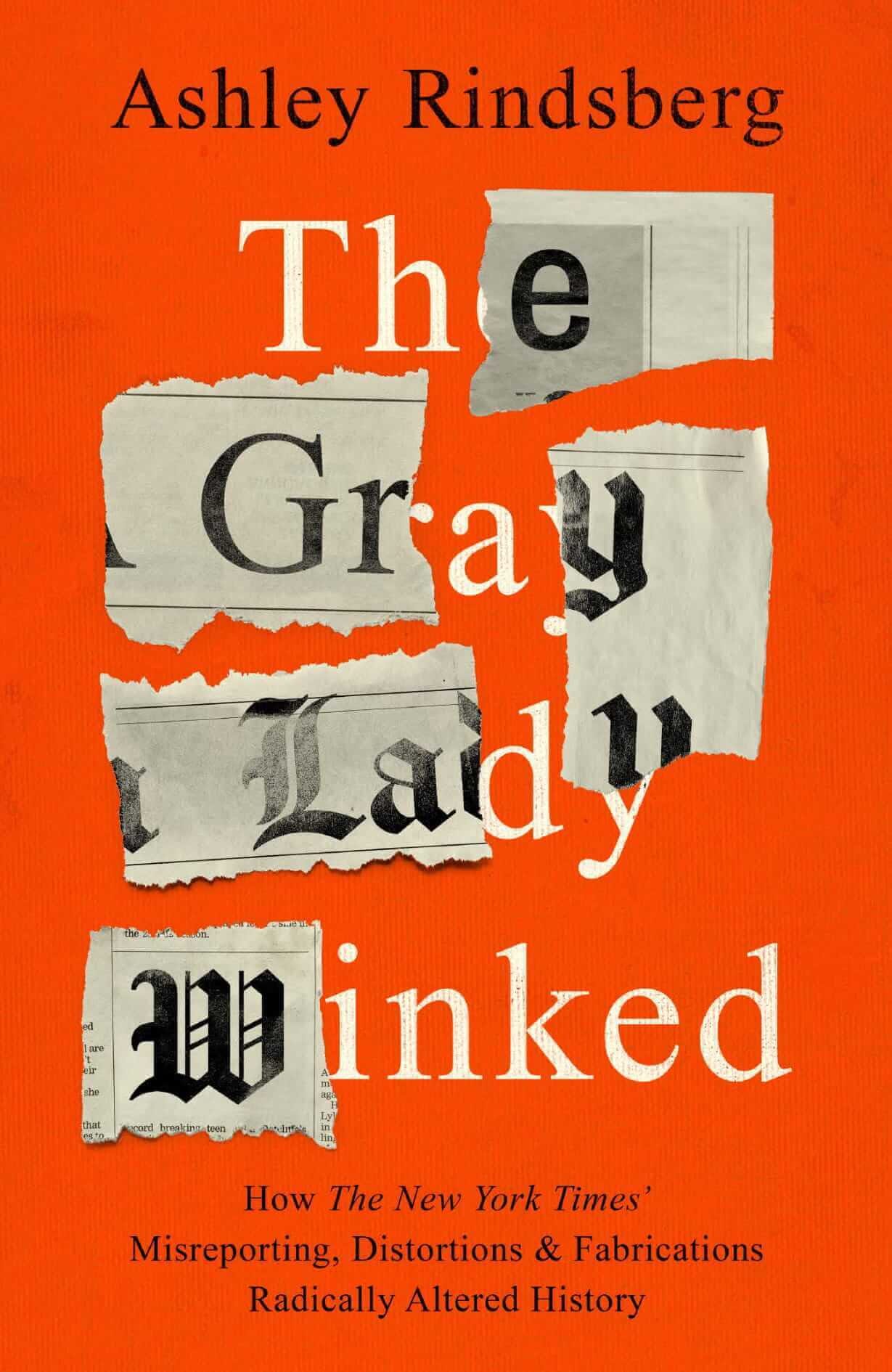

The NYT has itself been accused of plagiarism in the past. Former NYT executive editor Jill Abramson was accused of plagiarising portions of her book "Merchants of Truth," with allegations that some passages were lifted from other sources without proper attribution. Additionally, the NYT admitted in 2003 that reporter Jayson Blair had committed acts of plagiarism and fabrication.

The NYT has also been accused of bias in its coverage. For example, over 200 contributors sent a letter to the Times expressing serious concerns about editorial bias in its coverage of transgender issues.

In addition to these specific instances, the NYT has been critiqued more broadly for its reporting practices. Ashley Rindsberg's book, "The Gray Lady Winked," provides a comprehensive critique of the NYT's journalistic failures, arguing that these failures have had significant impacts on the course of history. The book suggests that the NYT's reporting has been influenced by ideology, ego, power, and politics, sometimes at the expense of presenting the facts accurately and objectively.

These issues of plagiarism, bias and misreporting could affect how the NYT's copyright claims in its lawsuit against OpenAI are perceived. Passages plagiarised from others are not the NYT's original expression, so they could weaken the newspaper's claim to own that particular content; bias and misreporting, by contrast, bear on the newspaper's credibility rather than on copyright ownership itself. However, the legal implications of these accusations would ultimately be determined by the court.

This lawsuit prompts a broader discussion about the use of internet content in training AI. If using any content on the internet for AI training is considered fair use, it could radically change our understanding of intellectual property rights. As AI continues to grow and push the boundaries of the traditional copyright system, we need to rethink our legal and ethical rules.

The case between OpenAI and The NYT is a clear indication that the tide is turning, and it's time for us to adapt and redefine our understanding of copyright in the age of Generative AI and LLMs.

The Open Source Revolution: A Precedent for Innovation

The open source movement, which until relatively recently was viewed with skepticism, has revolutionised the software industry and serves as a compelling precedent when considering the potential of Generative AI and LLMs.

In the early days of software development, the idea of freely sharing source code was met with resistance. Proprietary software, with its closed-source code, was the norm. Critics argued that open source would lead to chaos, with too many cooks in the kitchen, and that it would undermine the commercial viability of software development. However, the open source movement has proven these skeptics wrong.

Open source software has fostered unprecedented levels of collaboration, innovation, and rapid development. By making source code freely available, it has enabled developers worldwide to contribute to projects, fix bugs, and add features, accelerating the pace of development and improving the quality of software. It has also spurred innovation by allowing developers to build upon existing code rather than starting from scratch.

Companies like Google, Facebook, and IBM have embraced open source, contributing to and benefiting from this collaborative ecosystem. The success of the open source movement has also debunked the myth that it undermines commercial viability. Many businesses have built successful models around open source software, offering premium features, professional services, or enterprise versions of their software for a fee. We shall look into some of these case studies later in the newsletter.

Drawing parallels with the current caution around Generative AI and LLMs, we can see similar fears and skepticism. Concerns about copyright infringement, quality control, and commercial viability are prevalent. However, just as the open source movement turned these challenges into opportunities, a similar approach could be adopted for Generative AI and LLMs.

Opportunities for Enterprises

Enterprises across various sectors have a unique opportunity to leverage their non-sensitive data assets and participate in the creation of LLM marketplaces. This approach not only provides a new avenue for monetisation but also fosters a collaborative environment that can accelerate innovation and provide a competitive edge.

Monetising Non-Sensitive Data

Enterprises generate and collect vast amounts of data as part of their operations. While some of this data is sensitive or confidential, a significant portion is non-sensitive and can be used to train LLMs or shared with other entities. This non-sensitive data can take various forms depending on the industry:

Financial Sector

Market research reports: Summaries of market research that do not contain proprietary or confidential data.

Publicly available financial statements: Financial disclosures of publicly traded companies that are required by law to be made public.

De-identified consumer spending trends: Aggregated data on consumer spending that does not identify individual consumers.

Healthcare Sector

De-identified clinical trial results: Summaries of clinical trial outcomes where all patient identifiers have been removed.

Healthcare facility statistics: Data on hospital admission rates, average length of stay, etc., that do not include patient-specific information.

Aggregate health outcomes: Data on the effectiveness of certain treatments across a population without individual patient data.

Technology Sector

Product manuals: Instructions and user guides for products that have been released to the public.

Release notes: Information on software updates and bug fixes that are shared with users to inform them about changes and improvements.

Open-source code: Software code that is made publicly available and can be used and modified by anyone, subject to the terms of its licence.

Creative Sector

Public Relations Materials: Press releases, media kits, and other PR materials that are intended for public distribution can be used as non-sensitive data.

Brand Guidelines: Publicly available brand guidelines or style guides can be used as non-sensitive data, as they do not typically contain proprietary information.

Advertising Analytics: Aggregated data on ad performance, such as click-through rates, impressions, or conversion rates, can be used as long as it does not reveal personal information about individual users.

Education Sector

Course descriptions: Information about the content of educational courses that is typically available in public catalogs or brochures.

Institutional statistics: Data on enrolment numbers, graduation rates, and other institutional metrics that do not disclose individual student information.

Public Sector

Legislation and regulatory texts: Laws and regulations that have been enacted and are publicly accessible.

Demographic data: Aggregated data on population characteristics sourced from censuses or surveys that do not identify individuals.

By monetising this non-sensitive data, enterprises can create a new revenue stream while contributing to the broader AI ecosystem. However, it's crucial to ensure that any data sharing complies with privacy regulations and does not infringe on any copyrights.
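As an illustration of what "aggregated data that does not identify individual consumers" can look like in practice, the sketch below rolls hypothetical transaction records up to region-by-category totals, leaves customer identifiers out of the output, and suppresses any group with fewer than a minimum number of distinct customers (small-cell suppression). The record fields and the default threshold of 5 are assumptions for illustration; this kind of suppression is a common disclosure-control technique, but on its own it does not guarantee compliance with any particular privacy regulation.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Transaction:
    customer_id: str  # used only to count distinct customers; not included in the output
    region: str
    category: str
    amount: float

def aggregate_spending(transactions, min_customers=5):
    """Sum spending per (region, category), dropping groups with too few distinct customers."""
    totals = defaultdict(float)
    customers = defaultdict(set)
    for t in transactions:
        key = (t.region, t.category)
        totals[key] += t.amount
        customers[key].add(t.customer_id)
    return {
        key: {"customers": len(customers[key]), "total_spend": round(totals[key], 2)}
        for key in totals
        if len(customers[key]) >= min_customers  # suppress small, potentially identifying groups
    }

# Hypothetical data: six grocery shoppers in one region, two travellers in another.
txns = [Transaction(f"c{i}", "North", "groceries", 10.0) for i in range(6)]
txns += [Transaction("c100", "South", "travel", 500.0), Transaction("c101", "South", "travel", 450.0)]

print(aggregate_spending(txns))  # {('North', 'groceries'): {'customers': 6, 'total_spend': 60.0}}
```

The South/travel group is withheld because two customers are few enough that their individual spending could be inferred from the total.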

LLM Data Marketplaces

The concept of LLM data marketplaces takes this idea a step further. In these marketplaces, enterprises can sell, trade, or license their non-sensitive data or the LLMs they have trained. This not only provides an additional revenue stream but also fosters a collaborative environment where businesses, researchers, and developers can build upon each other's work, accelerating the pace of innovation in the field of AI.

The idea of LLM data marketplaces might seem at odds with the ethos of the open source movement, which emphasises free access and collaboration. However, these two concepts can coexist and even complement each other.

Open source software has shown that free access to certain resources can spur innovation and collaboration. Similarly, making certain non-sensitive data for training LLMs freely available could accelerate the development of AI technologies and applications.

At the same time, commercial interests can drive investment and further innovation. Enterprises can offer premium data for training LLMs for a fee, similar to how many open source companies offer paid versions of their software with additional features or services. This can provide the funding needed to sustain the development and maintenance of free resources, creating a virtuous cycle that benefits all participants.

Case Studies - Enterprises That Have Successfully Turned Copyright Into An Asset

The intersection of copyright and technology has been a complex terrain for many companies. However, several have successfully navigated these challenges to innovate and create new revenue streams. Here are a few notable examples:

Google and Fair Use

Google's handling of copyright issues in its Google Books and Android projects serves as a prime example of innovation amidst copyright complexities. In Google Books, the company digitised millions of books and made the text searchable, arguing that this constituted fair use. After a decade-long legal battle, the courts ruled in favour of Google, setting a precedent for transformative use of copyrighted material. Similarly, in the Android project, Google used Java APIs, leading to a legal battle with Oracle. In 2021 the U.S. Supreme Court ruled that Google's use was fair use, setting another important precedent in the tech industry.

IBM and Weather Data

IBM's acquisition of The Weather Company is another example of a company leveraging data to enhance its AI offerings. While weather data itself isn't copyrighted, the way it is presented and used can raise copyright questions. IBM has managed to use this data to feed its AI models without infringing on copyrights, demonstrating how companies can leverage data assets legally.

Adobe and Creative Commons Integration

Adobe, known for its creative software suites, has integrated Creative Commons licensing directly into some of its products. This allows users to easily license their creations and use others' work that has been shared under Creative Commons, fostering a culture of sharing and collaboration while respecting copyright.

Getty Images and Embedded Viewer

Getty Images, a world leader in visual communications, faced the issue of widespread unauthorised use of its images. Instead of solely pursuing legal action, Getty introduced an "Embed" feature that allows free use of many of its images for non-commercial purposes, as long as they are embedded directly from Getty's website. This approach has helped Getty keep control over the use of its copyrighted material while broadening its audience and potential customer base.

Red Hat and Open Source Business Model

Red Hat has built a successful business around open source software, particularly its Red Hat Enterprise Linux (RHEL) platform. While the software itself is open source, Red Hat sells subscriptions that provide customers with additional services such as support, training, and integration. This model has allowed Red Hat to profit from open source software while contributing to its development.

While some have criticised Red Hat's recent decisions, such as the handling of RHEL source code, the company maintains that its actions are in line with open source licences and rights, and it continues to contribute upstream. Despite these controversies, Red Hat's business model remains a testament to the viability of commercialising open source software.

Data Marketplaces

The concept of data marketplaces is still relatively new, but some companies have started to explore this avenue. This model could potentially be extended to non-sensitive data for training LLMs.

Dawex, a global data marketplace, allows companies to source, monetise, and exchange data securely and in full compliance with data protection regulations.

Blockchain-based data marketplaces like Ocean Protocol and Datum offer a decentralised platform for data transactions. They use smart contracts to make transactions transparent and secure, and they give data owners a level of control and privacy that can be particularly beneficial where data privacy and copyright are concerns.

dClimate is another decentralised network for climate data, leveraging blockchain technology to provide a secure and transparent marketplace where businesses, farmers, and researchers can access reliable climate data and forecasts.

Key Takeaways for Enterprise Decision Makers

As we navigate the era of AI and LLMs, the traditional concept of copyright is under re-evaluation. This shift presents a unique opportunity for enterprises to innovate and extract value from their non-sensitive data assets. Here are the key strategic takeaways for enterprise leaders:

Embrace the Shift in Copyright Perception: The advent of Generative AI & LLMs challenges the traditional concept of copyright, which was designed to protect the expression of ideas. In the Generative AI and LLM era, the focus is shifting towards the creation and application of ideas. Enterprises should embrace this shift, recognising that the true value lies in innovation and the application of ideas, not in the rigid control of their expression.

Foster a Culture of Innovation: Encourage a culture within your organisation that views technological advancements as opportunities for growth. This involves being open to experimenting with new Generative AI and LLM applications and business models that could potentially disrupt traditional practices.

Monetise Non-Sensitive Data Assets: Enterprises should establish frameworks that allow for the ethical and legal use of non-sensitive data. This includes participating in or creating data marketplaces that enable the monetisation of non-sensitive data, which can be used to train Generative AI and LLM models and drive innovation. By doing so, businesses can unlock new revenue streams while contributing to the advancement of AI technologies.

Stay Vigilant About Legal Issues: Despite the changing perception of copyright, vigilance regarding legal issues remains paramount. Enterprises must stay informed about evolving copyright laws and seek expert advice to navigate this changing landscape. The outcome of the OpenAI-NYT lawsuit will be critical. However, I still think this is bigger than the NYT and OpenAI, and a re-evaluation of copyright is already underway.

Engage in Industry Discussions and Policy-Making: By being proactive and engaging in industry discussions and policy-making processes, businesses can help shape a future where copyright law aligns with the realities of Generative AI and LLMs, and supports rather than hinders innovation.

Lead the Charge: The tide is indeed changing, and enterprise leaders have the opportunity to lead the charge by fostering a culture that values the sharing and growth of ideas. By doing so, they can position their organisations at the forefront of the Generative AI and LLM revolution, ready to harness its transformative potential.

Call to Action

We are in an era where open source software has become a cornerstone of technological innovation, demonstrating that free access to certain resources can spur creativity and collaboration.

The ongoing lawsuit between The New York Times and OpenAI is a pivotal moment in the AI and copyright landscape, highlighting the complexities of copyright in the AI era. However, it's important to remember that this lawsuit is just one part of a larger shift in how copyright is viewed in relation to Generative AI and LLMs.

Many companies have successfully navigated the complex terrain of copyright, turning potential challenges into commercial advantages. They have shown us that with strategic thinking and innovation, copyright issues can be transformed into opportunities to create new business models, services, and products that not only comply with the law but also drive the industry forward.

As a business leader, you are in a unique position to leverage these changes to drive growth and innovation in your organisation. Reflect on the lessons from these successful companies and consider how you can apply them to your own business strategies. Look at your non-sensitive data assets and think about how they can be monetised in a data marketplace. Stay informed about the evolving legal landscape and seek expert advice to navigate it. Engage in industry discussions and policy-making processes to help shape the future of copyright law in the AI era.

The future of AI is not just about technology, but also about the innovative business models and strategies that you adopt in response to this technology. Embrace the change, seize the opportunity, and lead your business into the future of AI. The tide is already turning, and it's time that enterprises get ahead of this and prepare for a new era.