Issue #7: The OpenAI Saga - Charting a New Course for Enterprises with Aspirational AI

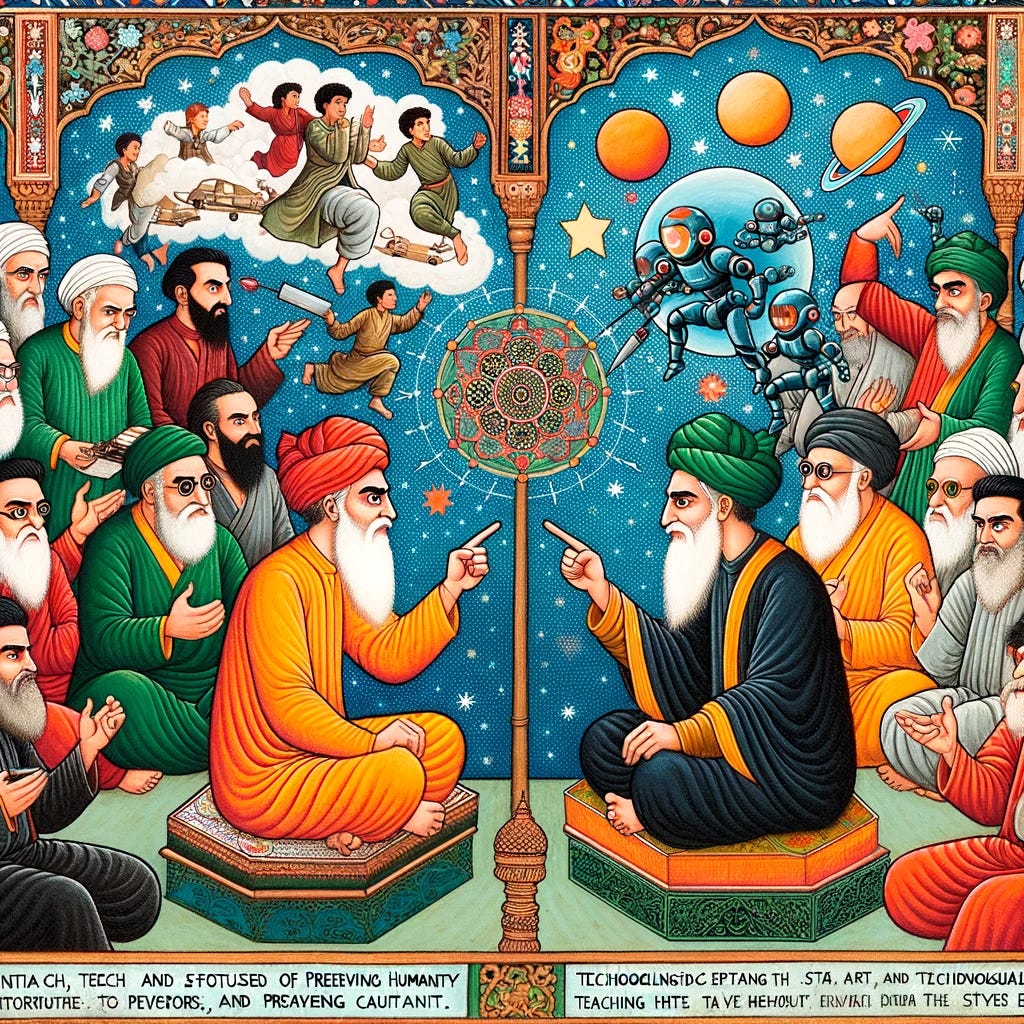

From Responsible AI to Ideological Tides: Navigating the Waters of Effective Altruism and Accelerationism

The past week has been tumultuous for OpenAI and for AI progress in general, marked by a series of events that read like a corporate thriller.

It started with a shocker: Sam Altman, the CEO, was abruptly fired by the OpenAI board on a seemingly regular Friday. The tech world was left reeling from the unexpected turn of events.

Then, in a twist worthy of a Hollywood script, a frenetic four days ensued, filled with intrigue and suspense.

Microsoft CEO Satya Nadella stepped in with an offer to bring the OpenAI team into Microsoft with Sam leading it, a move that came close to materialising and prompted jokes about a Microsoft bus being parked outside OpenAI headquarters.

The dust settled on Wednesday this week with a revised board at OpenAI, culminating in the dramatic reinstatement of Sam Altman as CEO.

The whole saga played out on Twitter/X, which is fascinating in itself.

The Role of Effective Altruism

Delving into the undercurrents of this saga, one finds the influence of Effective Altruism (EA) at its core.

EA, a philosophical and social movement advocating for using evidence and reason to do the most good, has been instrumental in shaping the AI narrative.

The recent FTX saga also involved EA, as Sam Bankman-Fried and most of his close associates had close links to the movement.

In the unfolding corporate drama at OpenAI, the role of EA became apparent soon after reports emerged last Friday. Three non-employee board members associated with EA (think tank researcher Helen Toner, Quora CEO Adam D’Angelo, and RAND scientist Tasha McCauley), alongside Ilya Sutskever, OpenAI’s Chief Scientist known for his EA sympathies, played critical roles in the decision to oust Sam Altman. Ilya later expressed regret over his involvement in these actions.

This move, speculated to be driven by EA's agenda to delay the advent of Artificial General Intelligence (AGI) for safeguarding humanity, hints at a deeper ideological battle.

The ousting by an EA-aligned group becomes even more significant given indications that a breakthrough around ‘Q learning’ (reportedly a project referred to as Q*) was achieved in the weeks and months leading up to Sam's firing.

This may have been the trigger, but the way events played out was shaped by philosophical differences and a desire to curtail progress.

Implications for the Broader AI Community

In my view, this power struggle at OpenAI isn’t just an isolated boardroom skirmish; it's a microcosm of a larger ideological clash within the AI community.

The incident has thrust the rift between two philosophical camps - the Effective Altruists (EAs) and the Effective Accelerationists (e/accs) - into the mainstream limelight.

On one side, the EA movement harbours a 'doomer' mentality and fears the unchecked progression of AI technology.

On the other, the techno-optimists, rallying under the banner of Effective Accelerationism, advocate for embracing AI's transformative potential.

The OpenAI crisis symbolises a turning point, questioning long-held narratives around AI development and its governance.

It challenges the conventional wisdom of "Responsible AI" and "Ethical AI" and opens the door for a more progressive, less fear-driven approach to AI in enterprises.

As the dust settles, one thing is clear: the AI community is at a crossroads, and the path it chooses now will shape not only the future of AI but also the role technology will play in our collective future.

Full Disclosure: My Alignment with Effective Accelerationism

My experience in the climate and environmentalism sector has convinced me that embracing progressive technology is essential to overcoming global challenges. Regrettably, the climate debate is often swayed by degrowth and doomer ideologies, which suggest that curbing economic and technological growth is the only way to prevent environmental decline. This view fails to recognise the potential of innovative solutions, such as nuclear energy, to sustainably address long-term issues. I'm concerned that the impasse nuclear energy ran into in the 1970s, which has contributed to the looming climate crisis, could recur with AI if we don't adopt the right narrative. In my view, aligning with e/acc is the right way forward.

EA vs E/ACC: The Ideological Divide

The EA Philosophy

Effective Altruism (EA) is more than a set of ideas; it's a movement that combines both a philosophical outlook and a practical approach to addressing the world's most pressing issues.

At its heart, EA is about using evidence and reason to determine the most effective ways to benefit others. In the context of AI, this philosophy has manifested in a cautious approach to AI development. EA is much broader than AI, but AI is certainly the movement's main focus area right now.

Proponents of EA in the AI field argue for stringent checks and balances, emphasising potential risks and advocating for a slowed progression towards Artificial General Intelligence (AGI).

Their concern is primarily rooted in existential risk - the idea that uncontrolled AI development could lead to scenarios that might threaten humanity itself.

This perspective has, knowingly or unknowingly, influenced policies and decision-making processes in AI enterprises, often leading to conservative, risk-averse strategies in AI development and deployment.

The Impact of EA on AI Development

EA's influence is evident in the widespread adoption of concepts like "Responsible AI" and "Aligned AI" within the tech community.

These concepts often prioritise safety, fairness, and ethical considerations, sometimes at the expense of rapid innovation and bold experimentation.

While these principles aim to safeguard against potential AI-related hazards, I argue that they also hinder the kind of breakthroughs necessary for major technological advancements.

The EA approach, with its focus on mitigating risks and moral considerations, can lead to a form of 'paralysis by analysis,' where the fear of unintended consequences impedes bold steps forward in AI.

This cautious stance has few precedents in the history of technological development. Rarely has there been such a widespread call to halt the progress of a technology before its potential has been realised.

When we enter the realm of theorising over negative possibilities, the search space becomes infinite, leading to an endless cycle of hypotheticals.

For instance, imagine if there had been a movement to slow down the progress on semiconductors because they could be used in precision-guided weaponry, or to ban the Internet due to its potential to enable illegal activities like paedophile rings.

Such a perspective overlooks the fundamental principle that every technology has its pros and cons. By focusing primarily on the negative potentials, we risk ignoring the immense positive impacts these technologies can have.

A more balanced approach would be to amplify the good while using reasonable and targeted actions to curtail the bad.

This way, we can harness the full potential of emerging technologies like AI, while managing their risks in a pragmatic and focused manner.

Introduction to Effective Accelerationism (e/acc) and Techno-Optimism

The e/acc movement, started by two pseudonymous Twitter users known as Beff Jezos and bayeslord, gained traction in October 2022.

The movement, a satirical spin on Effective Altruism, gained momentum with the rise in discussions around AI safety, particularly at the beginning of 2023.

In contrast to EA, e/acc represents a fundamentally different viewpoint. Rooted in techno-optimism, it is a burgeoning movement that embraces the transformative potential of AI.

It posits that accelerating technological progress, particularly in AI, is not just beneficial but essential for humanity's advancement.

This school of thought views AI as a pivotal force in propelling human civilisation forward – potentially aiding in everything from solving complex global challenges like climate change to achieving interstellar exploration.

At the heart of the e/acc ethos is the idea of humanity ascending and accelerating on the Kardashev scale, a measure of a civilisation's technological advancement based on its energy usage.

Developed by astrophysicist Nikolai Kardashev, the scale ranges from a Type I civilisation that harnesses energy at the planetary level, to a Type III civilisation that controls energy on a galactic scale, reflecting a trajectory of exponential technological growth and cosmic exploration.
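To make the scale concrete, here is a minimal sketch of how a civilisation's position on it is often computed. It assumes Carl Sagan's commonly cited interpolation formula, K = (log10(P) - 6) / 10 with P in watts, which is not part of the description above. The function name, the reference power levels, and the figure used for present-day humanity are illustrative assumptions rather than authoritative values.

```python
import math


def kardashev_rating(power_watts: float) -> float:
    """Return a fractional Kardashev rating for a given power use in watts.

    Assumes Sagan's interpolation: K = (log10(P) - 6) / 10.
    """
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return (math.log10(power_watts) - 6) / 10


# Order-of-magnitude reference levels implied by the formula above.
REFERENCE_POWER_WATTS = {
    "Type I (planetary)": 1e16,
    "Type II (stellar)": 1e26,
    "Type III (galactic)": 1e36,
}

if __name__ == "__main__":
    for label, watts in REFERENCE_POWER_WATTS.items():
        print(f"{label}: K = {kardashev_rating(watts):.1f}")
    # Hypothetical input: 2e13 W is a rough, illustrative figure for humanity today.
    print(f"Humanity (assumed 2e13 W): K = {kardashev_rating(2e13):.2f}")
```

Under this formula, every tenfold increase in power use adds 0.1 to the rating, which is why moving up the scale implies sustained exponential growth in energy capture.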

The Techno-Optimist Perspective

Techno-optimists, a label often used interchangeably with e/acc supporters, argue that while risks exist, they are outweighed by the immense potential benefits of AI.

They advocate for a more liberal approach to AI development, one that encourages innovation and experimentation.

This perspective is not blind to the potential pitfalls of AI; rather, it suggests that the best way to navigate these challenges is through rapid advancement and learning.

The belief is that through accelerated development, AI systems can evolve to manage and mitigate risks more effectively.

This approach aligns with a broader philosophy that sees technology as a key driver of human progress, advocating for a future where AI not only augments human capabilities but also opens up new horizons for humanity.

Recently, a number of prominent VCs and tech leaders have embraced the e/acc and techno-optimist movement and aligned themselves with its ethos. These include Marc Andreessen of Andreessen Horowitz, who wrote The Techno-Optimist Manifesto, and Garry Tan (President and CEO of YC).

Other prominent tech leaders and thinkers seen as aligned with e/acc include Balaji Srinivasan, Peter Thiel, Elon Musk and Vinod Khosla.

The Rise of Cultural Wars in AI Development

As these ideological differences become more pronounced, we will very likely witness the emergence of cultural wars within the realm of AI development.

Some of this has already started to take place on X/Twitter in the last few days.

On one side are the proponents of the EA-influenced narratives, advocating for a controlled and measured approach to AI. On the other side are the supporters of the e/acc philosophy, pushing for a more liberated and ambitious approach to harnessing AI's potential.

This division is not just philosophical; it has practical implications for how AI is developed, deployed, and governed in enterprises.

The cultural war in AI echoes broader societal debates around technology and progress. It's a struggle between fear and ambition, control and freedom, caution and daring.

Enterprises will find themselves at the crossroads of this ideological battle, forced to choose which path to follow.

The decisions made today will shape not just the future of individual companies but the trajectory of AI development as a whole.

Rethinking Enterprise AI: Beyond Fear and Control

The Ambiguity and Vagueness of AI Narratives

Responsible AI: This term is often presented as a catch-all for AI development and usage that is ethical, accountable, and fair. However, the lack of a clear, universally accepted definition of what constitutes "responsibility" in AI leads to varied interpretations and applications across different cultural and organisational contexts. Meta just announced that they were disbanding their Responsible AI team.

AI Safety: Conceptually, AI Safety is about preventing AI systems from causing unintended harm. Yet, what is considered “safe” can be subjective, influenced by the differing priorities and risk tolerances of stakeholders, leading to inconsistent safety benchmarks.

AI Alignment: The idea of aligning AI with human values is problematic due to the immense diversity in human beliefs and values. The concept raises critical questions about whose values are represented and how they are chosen, leading to potential biases and ethical dilemmas.

Critiques and Challenges

Overemphasis on Risk Aversion: The dominant narratives in Responsible AI and AI Safety often prioritise avoiding harm and mitigating risks, sometimes at the expense of innovation and progress. This conservative approach can lead to a form of risk-averse paralysis, where the fear of potential negative outcomes hinders the exploration of AI's full potential.

The Problem of Singular Alignment: Aligning AI with a specific set of values or ideals can be seen as imposing a monolithic perspective on AI development. This is especially concerning considering the rich diversity of human cultures and belief systems. A singular alignment risks neglecting this diversity and could lead to a form of intellectual imperialism.

Centralised Control vs. Decentralisation: The EA-influenced approach to AI, resembling a ‘Hobbesian Leviathan’, advocates centralised control over AI alignment and ethics. This contrasts with the e/acc view, which argues for a decentralised, diverse ecosystem of AI systems. A centralised model risks stagnation and potential misuse of power, while decentralisation promotes a dynamic and adaptive AI landscape.

Legal and Ethical Overlaps: Many areas of concern in AI, such as privacy, misinformation, and security, are already covered by existing legal frameworks. Adding AI-specific regulations might create unnecessary burdens on AI creators, whereas a balanced regulatory environment of the kind under which the internet has thrived would leave room for innovation.

Diverse Human Intelligence as a Model: Humans, with their diverse alignments and values, coexist within frameworks of laws and social norms. This diversity is managed not by imposing a singular value system but through legal and societal structures that allow a range of beliefs and behaviours. AI development could take a cue from this human model, allowing for a variety of AI systems with different alignments, checked and balanced within a broader legal and ethical framework.

Proposing a New Framework for AI Development in Enterprises

In the wake of the ideological clashes exemplified by the OpenAI saga and the growing divide between EA and e/acc, there's a need for a fresh perspective in enterprise AI.

This new paradigm, which I call "Aspirational AI," focuses on harnessing AI's potential to drive human progress while acknowledging and managing its risks in a pragmatic manner.

This framework moves beyond the fear-driven narratives and control-oriented approaches that have dominated the discourse, proposing a more balanced and forward-thinking strategy for AI development.

Emphasising Progress and Co-Evolution

Aspirational AI is grounded in the belief that AI can and should be a catalyst for extraordinary human advancement.

This approach encourages enterprises to view AI not just as a tool for incremental improvements or risk management, but as a partner in driving transformative change.

It emphasises the co-evolution of AI and humanity, where both progress together, learning from and adapting to each other.

This co-evolutionary perspective suggests that as AI develops, it can help us solve complex problems, unlock new opportunities, and even assist in addressing challenges like climate change or space exploration.

In this framework, AI development is not a race to the bottom in terms of risk aversion but a journey towards maximising potential and benefit.

Enterprises adopting Aspirational AI would prioritise projects that promise significant leaps in innovation, societal benefit, and human advancement.

Implementing Pragmatic Safeguards

While Aspirational AI is ambitious in its vision, it is also grounded in practicality.

It recognises the importance of safeguards but advocates for them to be pragmatic rather than restrictive.

These safeguards are akin to the guardrails in society – they provide necessary protection but do not impede progress. They are designed to evolve along with AI, ensuring that safety measures are as dynamic and adaptable as the technology they regulate.

In this model, enterprises would implement safeguards that are flexible, context-sensitive, and responsive to the rapidly changing AI landscape.

This could include adaptive ethical guidelines, real-time monitoring systems, and responsive governance structures that can quickly adjust to new developments and insights.

The concept of pragmatic safeguards in the context of Aspirational AI aligns with the idea of using existing legal and regulatory frameworks to manage potential risks associated with AI, without imposing excessive restrictions that could stifle innovation.

This approach recognises that many potential AI risks overlap with areas already covered by national security and other legal apparatuses. Here's how this could be applied in specific areas:

Biological Pathogens

AI in Biotech: AI's role in biotechnology could potentially lead to risks like the creation or modification of biological pathogens.

Existing Legal Frameworks: These risks are already governed by stringent biosecurity laws and international treaties. AI's involvement in biotech would fall under these existing regulations, ensuring that any AI-related activities comply with established biosecurity standards.

Cybersecurity

AI-Enhanced Cyber Threats: AI technologies can amplify cybersecurity threats, including sophisticated hacking techniques or AI-powered cyber attacks.

Utilising Cybersecurity Laws: Nations have established cybersecurity laws and frameworks to counter such threats. These can be adapted to include AI-specific provisions, ensuring a robust defence against AI-enhanced cyber threats without necessitating entirely new regulations.

Deepfakes

Manipulation and Misinformation: AI-generated deepfakes pose significant risks in spreading misinformation and manipulating digital content.

Applying Existing Laws: Laws related to fraud, intellectual property, and digital rights can be extended to cover deepfakes. This approach leverages current legal structures to address the misuse of AI in creating deceptive media.

General Principles for Pragmatic Safeguards

Flexibility: Safeguards should be adaptable, able to respond to the evolving capabilities and applications of AI technology.

Context-Sensitivity: The application of safeguards must consider the specific context and potential impact of each AI technology or application.

Responsive Governance: Regulatory bodies and enterprises should establish mechanisms for ongoing assessment and revision of AI-related policies, ensuring they remain relevant and effective.

Co-Evolution: Just as society co-evolves with technological advancements, so too should our legal and regulatory frameworks evolve alongside AI, ensuring they remain effective without hampering innovation.

Precedent of Internet Regulation: The internet serves as a precedent, demonstrating how society can benefit from a technology while managing its risks through adaptive regulation rather than restrictive controls.

Aspirational AI: Steering Towards Human Advancement

In the dialogue surrounding AI, Aspirational AI forges ahead, not merely balancing but actively favouring a vision of progress and human advancement.

It's a response to the cautious restraint of Effective Altruism and a reinforcement of the bold optimism championed by Effective Accelerationism.

This approach is about leveraging AI to its fullest potential, with a deliberate tilt towards technological aspiration over precaution.

Enterprises must embrace Aspirational AI, advocating for technological progression while judiciously managing risks. This strategy demands:

Reassessing 'Responsible AI' Initiatives: Review and realign your Responsible AI practices to marry ethical rigour with a commitment to innovation.

Navigating Cultural Shifts: Harmonise safety concerns with the pursuit of technological breakthroughs, positioning AI as a catalyst for progress rather than a peril.

Adaptive Safeguards: Craft safeguards that are flexible and evolve with AI advancements, supporting innovation and potentially involving regulatory engagement for more adaptable guidelines.

Cultivating a Progress-Driven Culture: Foster a corporate ethos that values aspirational objectives, utilising AI as an instrument for societal betterment and establishing your enterprise as an industry leader.