The Hidden AI Landgrab: How AI Datacenters Are Becoming the New Battleground

Tracking the Trillion-Dollar Rush for Datacenters, GPUs, and Energy Infrastructure

“The concrete piers in the ground were like seeds, scattered on the winds of time, each landing where it might, to root in history’s cracks and grow something new. No one saw the machines coming, not at first—just the dust rising where the past had been, and the hum of something inevitable.” - Grok 3 channeling William Gibson

Welcome back to The Uncharted Algorithm. In our earlier explorations we have wandered the exciting frontiers of AI algorithms and enterprise disruption.

This time, we’re zooming in on something far more concrete - literally.

Today we uncover the hidden AI landgrab, a covert rush for physical infrastructure that’s turning datacenters into the new battleground for tech giants.

Grab your hard hat and shovel; we’re digging in!

The Secret Gold Rush Beneath Our Feet

In the early days of the internet, power belonged to those with the best code or the most data. But now, an AI gold rush is underway, and its prospectors are after land, steel, and silicon. Around the world, massive datacenters are quietly materializing in unlikely places.

A desert patch in Arizona, once slated for a generic warehouse park, is being repurposed into a 122-acre, 400-megawatt “AI fortress” with three giant server buildings [1]. Nearby, another 400-acre site not far from TSMC’s new chip fabs is undergoing a mysterious conversion – local records show rezoning for “industrial use,” but our upcoming research shows it’s destined to become an AI compute hub [2].

And it’s not just Arizona: from the outskirts of Dublin to the edges of Singapore, land deals and construction permits hint at a global expansion of AI infrastructure.

The key word here is quiet. Tech companies aren’t exactly issuing press releases that they’re gobbling up real estate for datacenters. These projects often run under code names or through shell companies to avoid attention. But if you follow the faint signals – a zoning application here, a power substation upgrade there – a pattern emerges.

This is what inspired me to build an upcoming piece of research intelligence called the AI DataCenter Signals Intelligence Report, which pieces together these breadcrumbs of evidence. The findings are striking: a new landgrab is unfolding, largely under the radar.

Why all the secrecy? In an arms race, you don’t reveal your next missile silo. These AI supercomputing datacenters give whoever controls them a massive advantage – the ability to train more advanced models, process more data, and deliver smarter services. Every additional acre and megawatt secured could translate to a leap in AI capability.

So companies move in shadow: acquiring cheap land in remote areas, or leasing entire buildings near key fiber routes and power grids before competitors even know they’re available.

It’s a strategic landgrab reminiscent of the 19th-century railroad expansion, only now the railroads are rows of server racks humming with artificial brains.

Yet traces inevitably surface. Consider Phoenix, AZ: so many datacenters have sprung up around its periphery that the city recently debated limits on new builds [3]. Officials cited how these behemoths devour huge tracts of land and electricity while creating relatively few jobs – a trade-off not lost on local communities. Even so, the expansion continues, shifting to friendlier locales or skirting city limits. The hunger for infrastructure is simply too great to slow down.

Clues in the Supply Chain: GPUs, Coolants, and Concrete

It’s not just land that’s being snapped up. To understand the battleground, follow the supply lines feeding it. An AI datacenter isn’t much use without the hardware inside – thousands of cutting-edge GPUs, power systems, cooling systems, and energy grids. And here we find another covert front in the war: the race to secure components.

At the center of this is NVIDIA. Their high-end GPUs are the brains behind most AI workloads, and demand has exploded. How crucial is NVIDIA? Consider this: when NVIDIA’s next-generation “Blackwell” AI chips hit a production delay, pushing launch to early 2025, it sent shockwaves through the industry.

Suddenly, every tech giant’s secret timeline for upgrading their datacenters had to be recalibrated. Some responded by stockpiling current-generation chips – and, per our analysis, NVIDIA has amassed a $2 billion inventory buffer [4] to help partners bridge the gap. In the old days, software engineers fretted over code releases; now CTOs fret over chip delivery dates. The power dynamics are shifting: a chip supplier’s roadmap can dictate the pace of the entire AI field.

Meanwhile, hyperscalers (the likes of Amazon, Microsoft, Google, Meta) aren’t sitting idle. They’ve read the writing on the wall and are pursuing vertical integration to lessen their dependence on any one supplier. Many of them are designing their own AI chips in-house – for example, Meta and AWS have been co-developing custom “edge AI” chiplets to reduce reliance on NVIDIA [5].

Microsoft, on the other hand, is pouring resources into gigantic next-gen datacenter campuses in collaboration with OpenAI, an effort known as “Project Stargate”. According to our signals intelligence, Project Stargate will pack as much as 5 GW of power capacity [6] – which essentially means building its own power plants to feed AI computations. These maneuvers are the modern equivalent of forging your own weapons and securing your own energy supply before heading into battle.

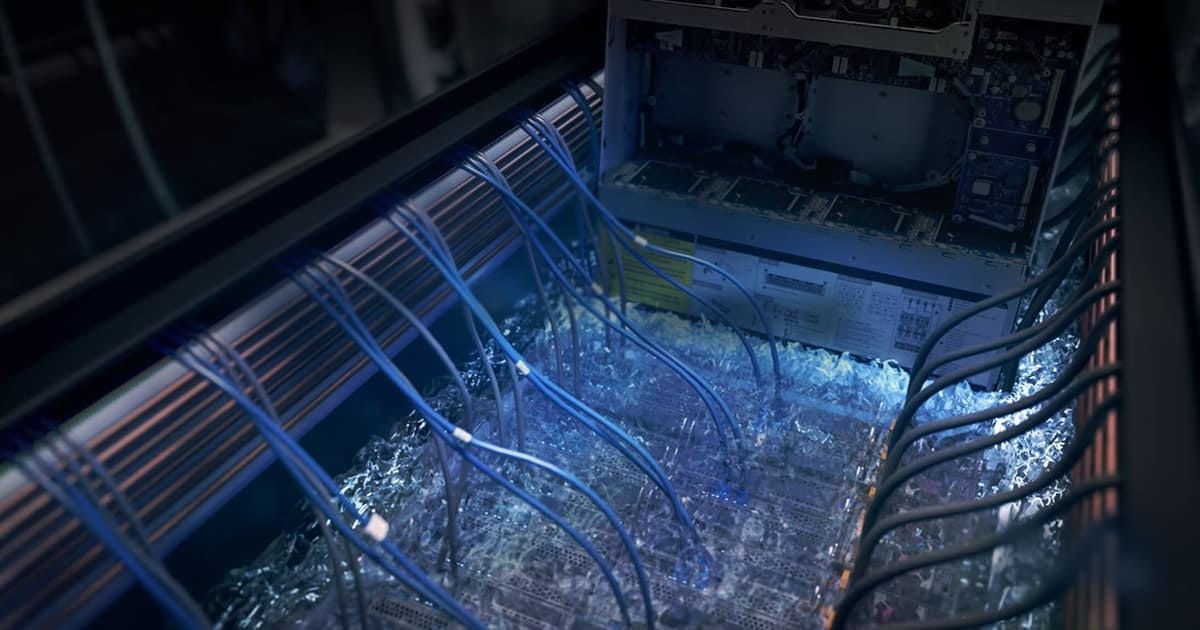

Today’s AI supercomputers guzzle electricity and spit out heat at levels that would fry yesterday’s server farms. A single rack of state-of-the-art AI servers can draw between 30 and 100 kilowatts – at its peak, about as much power as a hundred homes use. Traditional air-conditioning and other conventional cooling solutions just can’t cut it anymore. So a new cooling arms race has emerged.
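
To put the rack figure in household terms, here is a rough back-of-the-envelope sketch. The household consumption figure (about 10,500 kWh per year, roughly 1.2 kW of continuous draw) is an assumption chosen for illustration, not a number from our report, and the function name is hypothetical.

```python
HOURS_PER_YEAR = 8760

def homes_equivalent(rack_kw: float, home_kwh_per_year: float = 10_500.0) -> float:
    """Number of average homes whose continuous draw matches one rack.

    home_kwh_per_year is an assumed annual household consumption.
    """
    avg_home_kw = home_kwh_per_year / HOURS_PER_YEAR  # average continuous draw per home
    return rack_kw / avg_home_kw

# Low and high ends of the per-rack range quoted above
for rack_kw in (30, 100):
    print(f"{rack_kw} kW rack ~ {homes_equivalent(rack_kw):.0f} homes")
```

Under that assumption, a 100 kW rack lands in the range of 80 to 90 homes, the same order of magnitude as the hundred-home comparison; a lower household figure pushes the count higher.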

One leading player, Vertiv, has developed specialized liquid immersion tanks to dunk servers in cooling fluid, allowing a single multi-rack system to run at 400 kW and beyond [7]. Other systems use two-phase immersion cooling, which can handle 200 kW per rack, with higher capacities projected for the future [8].

The adoption of immersion cooling is skyrocketing: industry data (and our intel gathering) show over 1,200 of these tank units being deployed per month by Q3 2025 to keep up with the heat output of advanced AI chips [9].

Picture warehouse-sized rooms, not with rows of fans, but with rows of sealed tubs gurgling with coolant and servers – the datacenters of the future sound more like brewing factories!

Securing enough power and coolant has become as important as securing the chips themselves. Supply chain signals show unusual spikes in orders for things like industrial chillers, backup generators, and even rail tankers filled with coolant chemicals.

And don’t forget the basic building blocks: concrete and steel. Construction firms that specialize in datacenters have backlogs stretching years out. One top contractor likened the current demand to a “Data Center Manhattan Project” – a frantic, all-hands push to build, build, build, often under NDAs for clients who refuse to be named. Each new build is a chess move on the global board.

Behind all of this, another drama plays out in the silicon foundries and chip packaging houses. Companies like TSMC (which manufactures the advanced chips for NVIDIA and others) are racing to expand their capacity for advanced chip packaging by an astounding 470% year-on-year.

This specialized process, needed to stitch AI chips together with high-bandwidth memory, became a surprise bottleneck recently – you can have all the silicon wafers you want, but if you can’t package them into modules fast enough, they’re just expensive pieces of crystal.

In response, TSMC is pouring money into enlarging this pipeline; our report notes a target of 660,000 wafer packages by 2025 [10].

Still, demand might outstrip even that aggressive expansion, which means some players could find themselves waiting in line for these chips (or ammunition). In an arms race, a supply choke-point is deadly, and everyone knows it.

Power Plays: Energy and Influence

There’s another kind of power in play – the electric kind. If data is the new oil, then electricity is the new lifeblood of tech empires. These AI facilities consume mind-boggling amounts of energy, so securing reliable, cheap power has become a strategic imperative. It’s no coincidence that many new datacenters are sprouting near big power lines or renewable energy projects. In fact, energy companies are now quietly part of the landgrab coalition. For example, utility giants like Duke and Dominion are upgrading grids and building new substations to handle the load, investing over $10 billion in grid modernization efforts in hotspot regions [11].

Wind farm and solar park deals are being inked left and right to feed the hungry AI beasts – a 3 gigawatt wind project in the North Sea here, a hybrid solar-wind farm in Germany there [12]. The datacenter boom is increasingly dictating where and how new energy infrastructure is deployed.

This convergence of tech and energy is shifting power dynamics in a very literal sense. Cities and regions that attract AI datacenters gain clout (and tax revenue), but they also inherit the stress on their grids and water resources. Nations are starting to treat AI infrastructure as critical assets – even matters of national security.

We’re seeing early signs of “sovereign AI infrastructure” initiatives: for instance, the U.S. Department of Defense is planning its own secured AI compute installations on military bases, ensuring that certain AI capabilities run on domestic soil [13]. Other countries are surely not far behind, if not already doing it covertly. After all, if AI is to be the engine of economic and military might, you want control over the engine room.

And what about the smaller players? In past issues, we often mused about how a clever startup with a smart algorithm could topple giants. But in this new landscape, the barriers to entry are rising. If cutting-edge AI requires access to a cluster of tens of thousands of GPUs and tens to hundreds of megawatts of power, the garage startup won’t be building that in their backyard. This could consolidate power in the hands of the few who already have deep pockets and infrastructure prowess – unless new paradigms (like decentralized computing or cloud cooperatives) emerge to counterbalance it.
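
A rough sizing sketch shows why that scale sits out of a startup’s reach. The per-GPU draw (about 700 W, in line with published figures for current high-end accelerators), the server overhead multiplier, and the PUE value below are all illustrative assumptions, not figures from our report, and the function name is hypothetical.

```python
def cluster_power_mw(num_gpus: int,
                     gpu_watts: float = 700.0,      # assumed per-GPU draw
                     server_overhead: float = 1.5,  # assumed CPUs, memory, networking
                     pue: float = 1.3) -> float:    # assumed cooling and power losses
    """Estimate total facility power in megawatts for a GPU cluster."""
    it_watts = num_gpus * gpu_watts * server_overhead  # IT load only
    return it_watts * pue / 1_000_000                  # facility load, in MW

for n in (10_000, 50_000, 100_000):
    print(f"{n:>7,} GPUs ~ {cluster_power_mw(n):.1f} MW")
```

Under these assumptions, tens of thousands of GPUs translate into tens of megawatts of continuous demand, which is a utility-scale connection rather than anything a small team can provision on its own.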

It’s a theme to watch: will the future of AI be an oligopoly controlled by those who won the landgrab? Or will new innovations open-source and distribute this power? The jury’s out, but the current trajectory points to heavier concentration of power (both computational and corporate).

Why This Landgrab Matters (and What’s Next)

All of this might sound a bit alarming, or at least awe-inspiring. Vast secretive datacenters, shadow supply chains for GPUs and coolant, utility companies retooling grids for AI – it reads like a technothriller.

But it’s really happening, and it matters for everyone tracking the future of AI. The capabilities of AI systems we’ll see in the next few years – the breakthroughs in what they can do – will be directly tied to this underbelly of infrastructure. When you hear about a new AI model that can do incredible things, ask yourself: where was it trained, and on whose datacenter? The winners of the AI race may be determined as much by where and on what they run their algorithms as by the algorithms themselves.

For me personally, uncovering this hidden landgrab has been a journey of its own. I’ve teased some of the findings here in broad strokes – enough to reveal the big picture without spilling all the secrets.

In the upcoming AI DataCenter Signals Intelligence Report, we’ll dive deep into the specifics: which companies are acquiring land (and how much), the timeline of GPU shipments and shortages, who’s investing in cooling tech, and how supply chain bottlenecks are being (or not being) resolved. We’ll name key players – from the familiar Big Tech titans to the unsung heroes enabling them (ever heard of Vertiv or GRC? You will) – and we’ll map out the global hotspots of AI infrastructure expansion with hard data and analytics.

Consider this newsletter a map’s legend, preparing you to read the full map that’s coming. By now, you’re among the savvy who know that behind every Claude, ChatGPT, or DeepSeek lie football-field-sized buildings roaring with fans (or bubbling with fluid) and drawing megawatts from the grid. It’s a hidden world, but one that’s increasingly defining our world. And like any landgrab, fortunes and futures will be made or lost on the outcome.

Stay tuned for the full report – it promises to shine light on these shadowy machinations in unprecedented detail. The next time you drive by a mysterious new building with heavy security and no company sign, you might just be witnessing the latest move in The Hidden AI Landgrab.

After all, the battleground of the 21st century isn’t only online or in labs; it’s on the very real, very physical ground – datacenter by datacenter, megawatt by megawatt. And understanding this will be crucial for anyone seeking to chart the future of AI.

Thank you for reading The Uncharted Algorithm. If you found this eye-opening, you won’t want to miss the forthcoming AI DataCenter Signals Intelligence Report – consider it required reading for staying ahead in the AI infrastructure race. As always, we’ll navigate these uncharted territories together, shining a light where few think to look. Stay curious, stay informed, and see you in the next issue.

Sources: Signals intelligence gathered for the AI DataCenter SIGINT Report; excerpts cross-verified from industry data and news.